- 免费的Kubernetes在线实验平台介绍2(官网提供的在线系统)

- 安装kubernetes方法

- 通过kubeadm方式在centos7.6上安装kubernetes v1.14.2集群

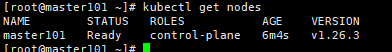

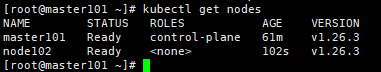

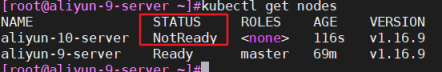

- 通过kudeadm方式在centos7上安装kubernetes v1.26.3集群

- 二进制安装部署kubernetes集群-->建议首先部署docker

- 组件版本和配置策略

- 系统初始化

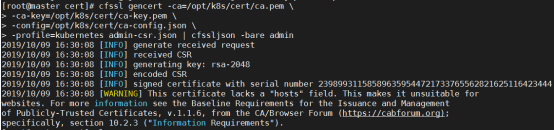

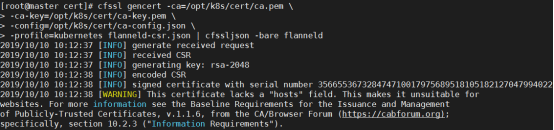

- 创建 CA 证书和秘钥

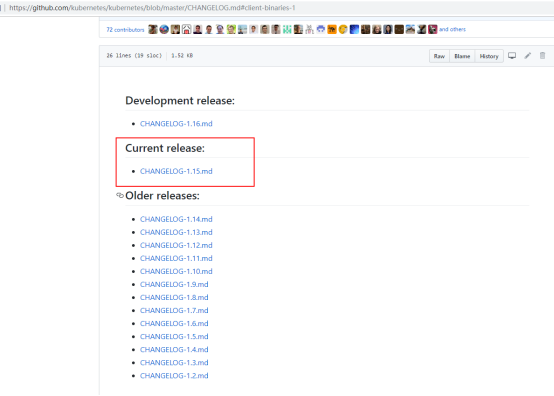

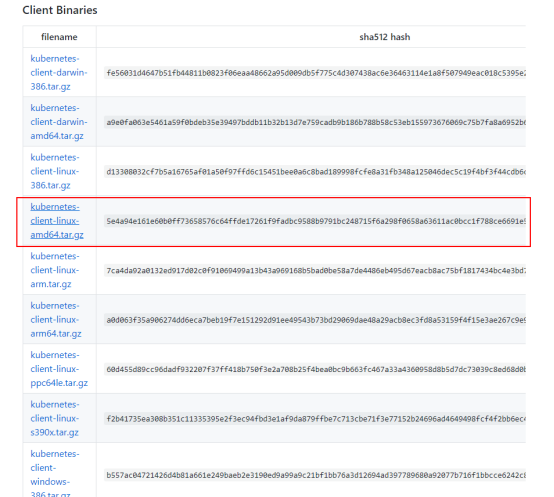

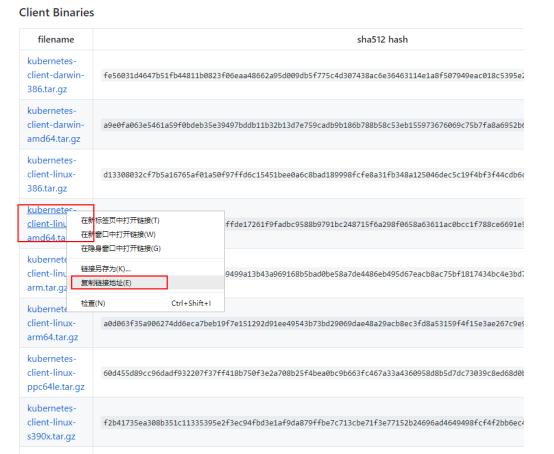

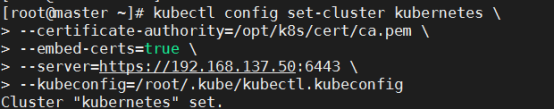

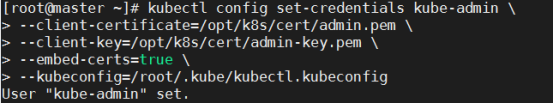

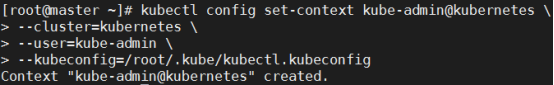

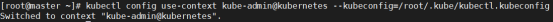

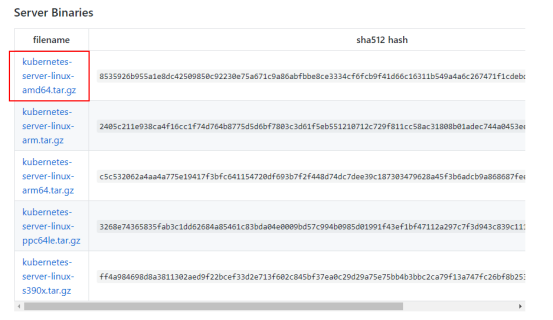

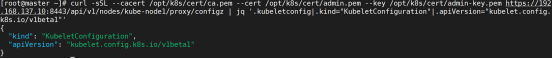

- 部署 kubectl 命令行工具

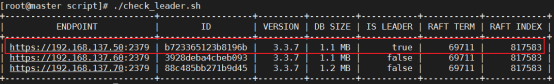

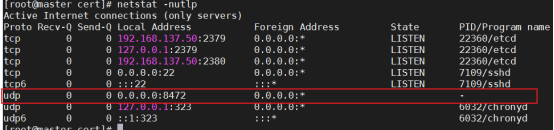

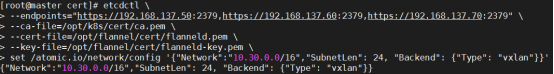

- 部署 etcd 集群

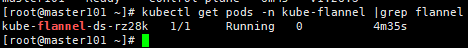

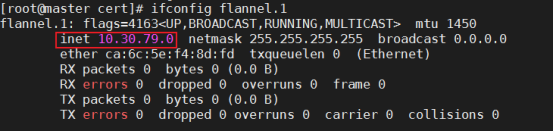

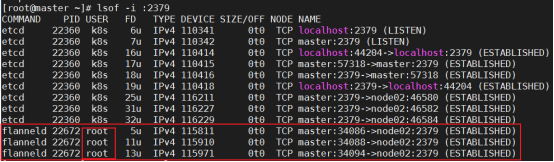

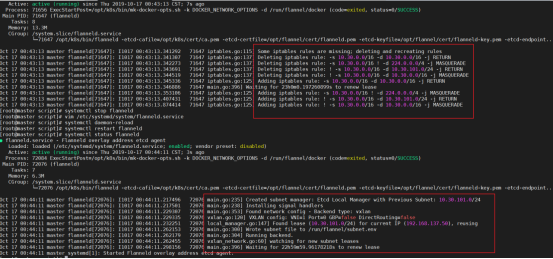

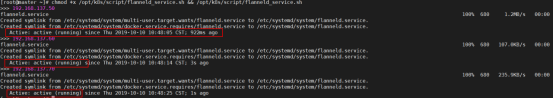

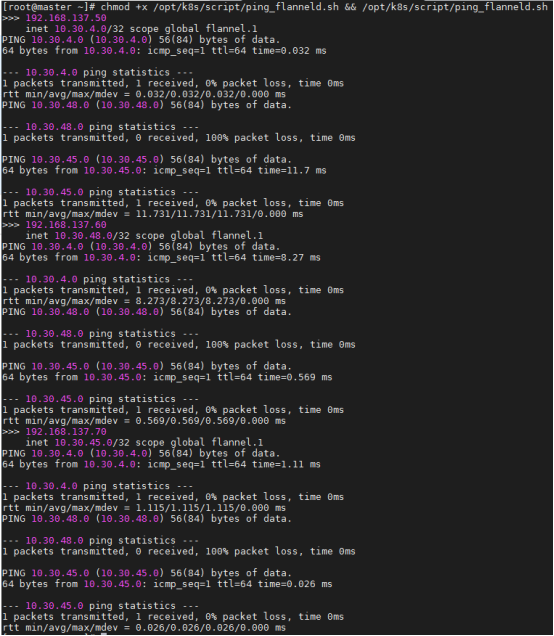

- 部署 flannel 网络(UDP:8472)

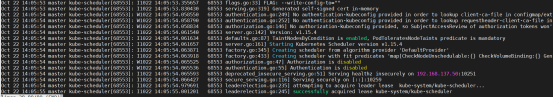

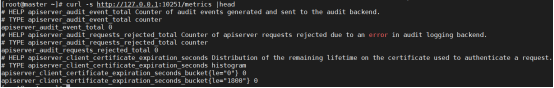

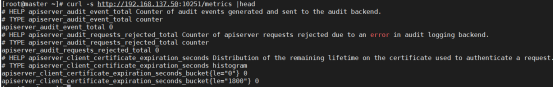

- 部署 master 节点

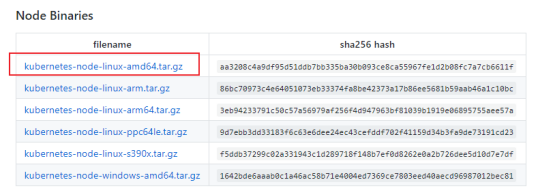

- 部署 worker 节点--->建议首先部署docker

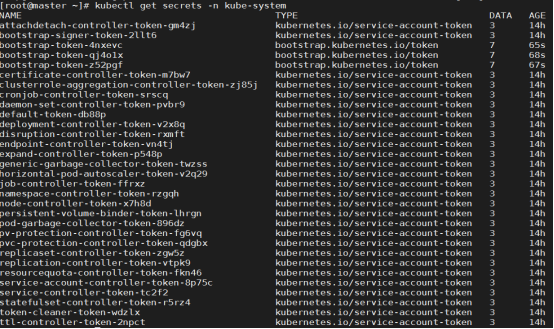

- 验证集群功能

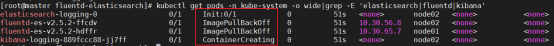

- 部署集群插件

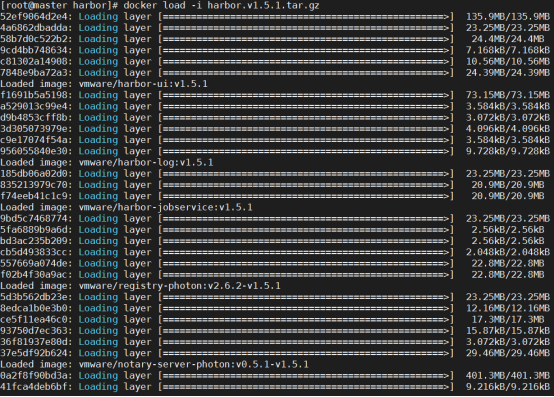

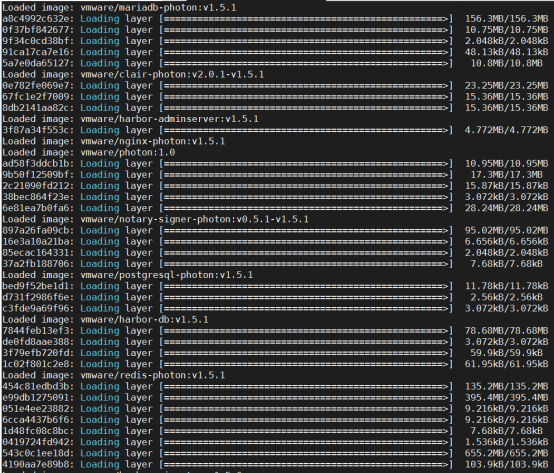

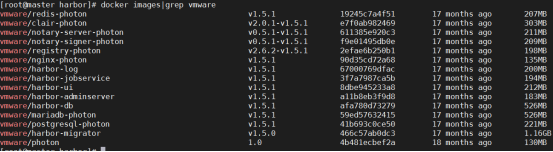

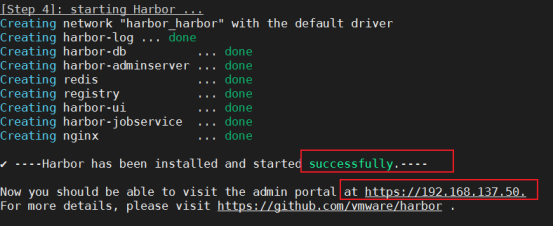

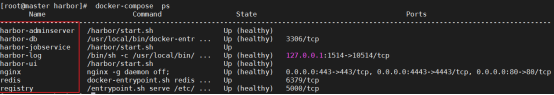

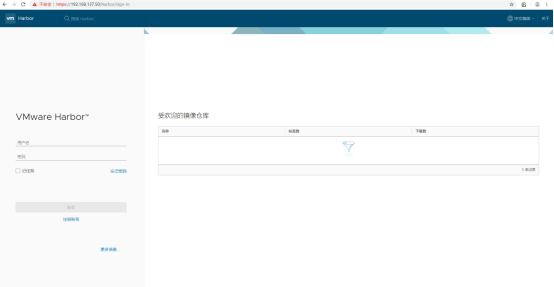

- 部署 harbor 私有仓库

- 清理集群

- 二进制部署kubernetes多主多从集群

免费的Kubernetes在线实验平台介绍2(官网提供的在线系统)

现在我们已经了解了Kubernetes核心概念的基本知识,你可以进一步阅读Kubernetes 用户手册。用户手册提供了快速并且完备的学习文档。

如果迫不及待想要试试Kubernetes,可以使用Google Container Engine。Google Container Engine是托管的Kubernetes容器环境。简单注册/登录之后就可以在上面尝试示例了。

Play with Kubernetes 介绍

博客参考:

https://www.hangge.com/blog/cache/detail_2420.html

https://www.hangge.com/blog/cache/detail_2426.html

PWK 官网地址:https://labs.play-with-k8s.com/

(1)Play with Kubernetes一个提供了在浏览器中使用免费CentOS Linux虚拟机的体验平台,其内部实际上是Docker-in-Docker(DinD)技术模拟了多虚拟机/PC 的效果。

(2)Play with Kubernetes平台有如下几个特色:

-

允许我们使用 github 或 dockerhub 账号登录

-

在登录后会开始倒计时,让我们有 4 小时的时间去实践

-

K8s 环境使用 kubeadm 来部署(使用用 weave 网络)

-

平台共提供 5 台 centos7 设备供我们使用(docker 版本为 17.09.0-ce)

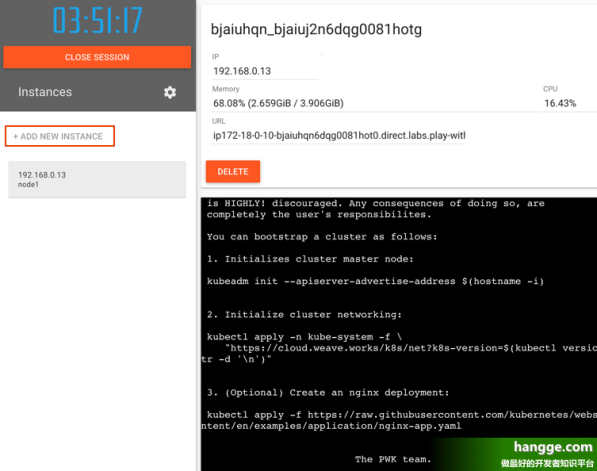

(1)首先访问其网站,并使用github 或dockerhub 账号进行登录。

(2)登录后点击页面上的Start 按钮,我们便拥有一个自己的实验室环境

(3)单击左侧的"Add New Instance" 来创建第一个Kubernetes 集群节点。它会自动将其命名为"node1",这个将作为我们群集的主节点

(4)由于刚创建的主节点IP 是192.168.0.13,因此我们执行如下命令进行初始化:

kubeadm init --apiserver-advertise-address 192.168.0.13 --pod-network-cidr=10.244.0.0/16

(5)初始化完毕完成之后,界面上会显示kubeadm join命令,这个用于后续node 节点加入集群使用,需要牢记

(6)接着还需要执行如下命令安装Pod 网络(这里我们使用flannel),否则Pod 之间无法通信。

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

(7)最后我们执行kubectl get nodes 查看节点状态,可以看到目前只有一个Master 节点

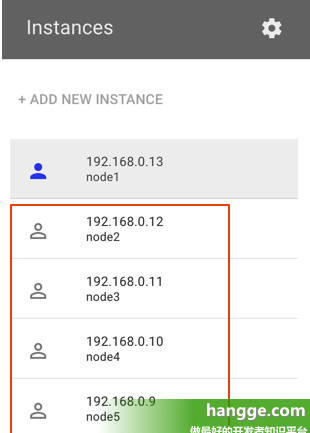

(8)我们单击左侧的"Add New Instance"按钮继续创建4个节点作为node 节点

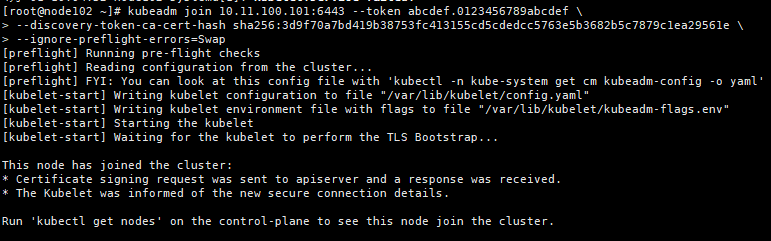

(9)这4个节点都执行类似如下的kubeadm join命令加入集群(即之前master 节点初始化完成后红框部分内容)

kubeadm join 192.168.0.13:6443 --token opip9p.rh35kkvqzwjizely --discovery-token-ca-cert-hash sha256:9252e13d2ffd3569c40b02c477f59038fac39aade9e99f282a333c0f8c5d7b22

(10)最后我们在主节点执行kubectl get nodes查看节点状态,可以看到一个包含有5 个节点集群已经部署成功了

安装kubernetes方法

方法1:使用kubeadm 安装kubernetes(本文演示的就是此方法)

-

优点:你只要安装

kubeadm即可;kubeadm会帮你自动部署安装K8S集群;如:初始化K8S集群、配置各个插件的证书认证、部署集群网络等;安装简易。 -

缺点:不是自己一步一步安装,可能对K8S的理解不会那么深;并且有那一部分有问题,自己不好修正。

方法2:二进制安装部署kubernetes(详见下篇kubernetes系列04--二进制安装部署kubernetes集群)

-

优点:K8S集群所有东西,都由自己一手安装搭建;清晰明了,更加深刻细节的掌握K8S;哪里出错便于快速查找验证。

-

缺点:安装较为繁琐麻烦,且易于出错

通过kubeadm方式在centos7.6上安装kubernetes v1.14.2集群

https://www.qikqiak.com/post/use-kubeadm-install-kubernetes-1.15.3/

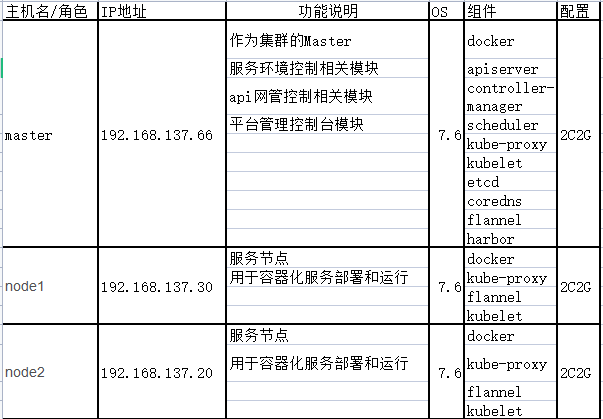

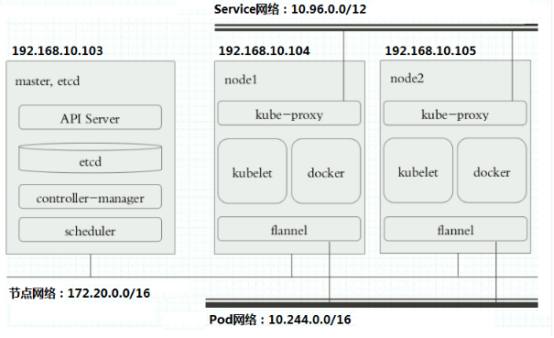

kubeadm部署3节点kubernetes1.13.0集群(master节点x1,node节点x2)

集群部署博客参考:

https://www.hangge.com/blog/cache/detail_2414.html

https://blog.csdn.net/networken/article/details/84991940

https://zhuyasen.com/post/k8s.html

节点详细信息

K8S搭建安装示意图

一、Docker安装

所有节点(master and node)都需要安装docker,部署的是Docker version 19.03.2

二、k8s安装准备工作

安装Centos是已经禁用了防火墙和selinux并设置了阿里源。master和node节点都执行本部分操作

1. 配置主机

1.1 修改主机名(主机名不能有下划线)

[root@centos7 ~]# hostnamectl set-hostname master

[root@centos7 ~]# cat /etc/hostname

master

退出重新登陆即可显示新设置的主机名master

1.2 修改hosts文件

[root@master ~]# cat >> /etc/hosts << EOF

192.168.137.66 master

192.168.137.30 node01

192.168.137.20 node02

EOF

2. 同步系统时间

$ yum -y install ntpdate && ntpdate time1.aliyun.com

$ crontab -e #写入定时任务

1 */2 * * * /usr/sbin/ntpdate time1.aliyun.com

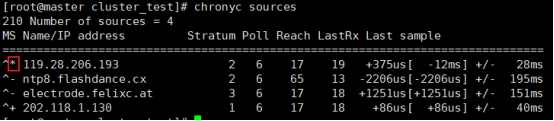

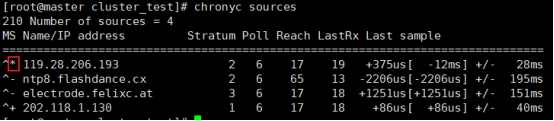

centos7默认已启用chrony服务,执行chronyc sources命令,查看存在以*开头的行,说明已经与NTP服务器时间同步

3. 验证mac地址uuid

[root@master ~]# cat /sys/class/net/ens33/address

[root@master ~]# cat /sys/class/dmi/id/product_uuid

保证各节点mac和uuid唯一

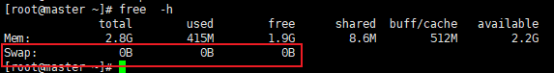

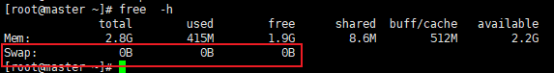

4. 禁用swap

为什么要关闭swap交换分区?

Swap是交换分区,如果机器内存不够,会使用swap分区,但是swap分区的性能较低,k8s设计的时候为了能提升性能,默认是不允许使用交换分区的。Kubeadm初始化的时候会检测swap是否关闭,如果没关闭,那就初始化失败。如果不想要关闭交换分区,安装k8s的时候可以指定--ignore-preflight-errors=Swap来解决

解决主机重启后kubelet无法自动启动问题:https://www.hangge.com/blog/cache/detail_2419.html

由于K8s必须保持全程关闭交换内存,之前我安装是只是使用swapoff -a 命令暂时关闭swap。而机器重启后,swap 还是会自动启用,从而导致kubelet无法启动

3.1 临时禁用

[root@master ~]# swapoff -a

3.2 永久禁用

若需要重启后也生效,在禁用swap后还需修改配置文件/etc/fstab,注释swap

[root@master ~]# sed -i.bak '/swap/s/^/#/' /etc/fstab

或者修改内核参数,关闭swap

echo "vm.swappiness = 0" >> /etc/sysctl.conf

swapoff -a && swapon -a && sysctl -p

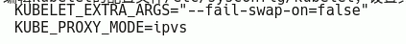

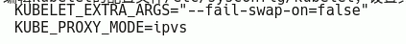

或者:Swap的问题:要么关闭swap,要么忽略swap

[root@elk-node-1 ~]# vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

5. 关闭防火墙、SELinux

在每台机器上关闭防火墙:

① 关闭服务,并设为开机不自启

$ sudo systemctl stop firewalld

$ sudo systemctl disable firewalld

② 清空防火墙规则

$ sudo iptables -F && sudo iptables -X && sudo iptables -F -t nat && sudo iptables -X -t nat

$ sudo iptables -P FORWARD ACCEPT

-F 是清空指定某个 chains 内所有的 rule 设定

-X 是删除使用者自定 iptables 项目

1、关闭 SELinux,否则后续 K8S 挂载目录时可能报错 Permission denied :

$ sudo setenforce 0

2、修改配置文件,永久生效;

sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

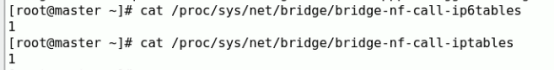

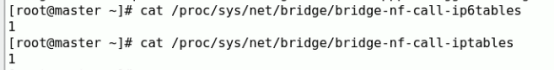

6. 内核参数修改

开启 bridge-nf-call-iptables,如果 Kubernetes 环境的网络链路中走了 bridge 就可能遇到上述 Service 同节点通信问题,而 Kubernetes 很多网络实现都用到了 bridge。

启用 bridge-nf-call-iptables 这个内核参数 (置为 1),表示 bridge 设备在二层转发时也去调用 iptables 配置的三层规则 (包含 conntrack),所以开启这个参数就能够解决上述 Service 同节点通信问题,这也是为什么在 Kubernetes 环境中,大多都要求开启 bridge-nf-call-iptables 的原因。

RHEL / CentOS 7上的一些用户报告了由于iptables被绕过而导致流量路由不正确的问题

WARNING: bridge-nf-call-iptables is disabled

WARNING: bridge-nf-call-ip6tables is disabled

解决上面的警告:打开iptables内生的桥接相关功能,已经默认开启了,没开启的自行开启(1表示开启,0表示未开启)

# cat /proc/sys/net/bridge/bridge-nf-call-ip6tables

1

# cat /proc/sys/net/bridge/bridge-nf-call-iptables

1

4.1 自动开启桥接功能

临时修改

[root@master ~]# sysctl net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-iptables = 1

[root@master ~]# sysctl net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-ip6tables = 1

[root@master ~]# sysctl net.ipv4.ip_forward=1

net.ipv4.ip_forward = 1

或者用echo也行:

echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

echo 1 > /proc/sys/net/bridge/bridge-nf-call-ip6tables

4.2 永久修改

[root@master ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

[root@master ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1 #Docker从1.13版本开始调整了默认的防火墙规则,禁用了iptables filter表中FOWARD链,导致pod无法通信

# 应用 sysctl 参数而不重新启动

sudo sysctl --system

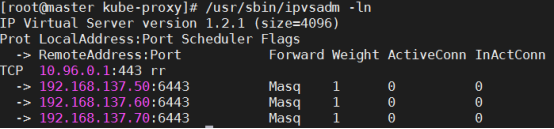

7. 加载ipvs相关模块

由于ipvs已经加入到了内核的主干,所以为kube-proxy开启ipvs的前提需要加载以下的内核模块:

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack_ipv4

在所有的Kubernetes节点执行以下脚本:

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

执行脚本

[root@master ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

上面脚本创建了/etc/sysconfig/modules/ipvs.modules文件,保证在节点重启后能自动加载所需模块。 使用lsmod | grep -e ip_vs -e nf_conntrack_ipv4命令查看是否已经正确加载所需的内核模块。

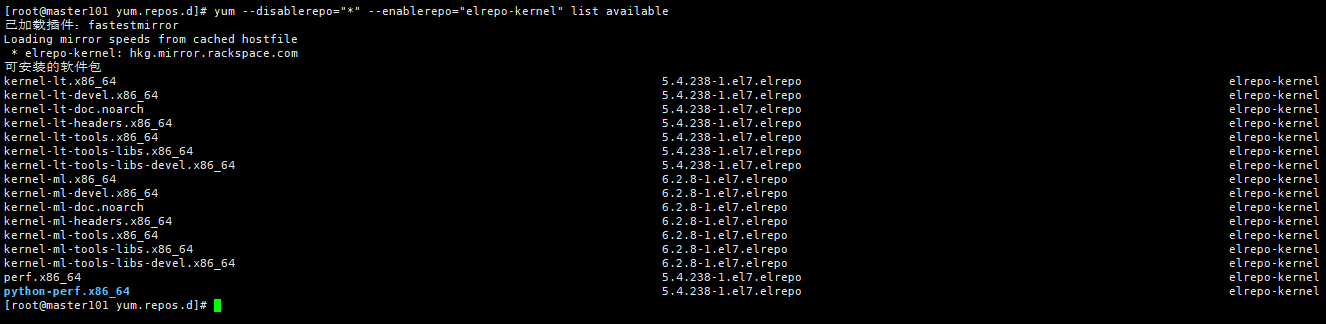

进行配置时会报错modprobe: FATAL: Module nf_conntrack_ipv4 not found.

这是因为使用了高内核,一般教程都是3.2的内核。在高版本内核已经把nf_conntrack_ipv4替换为nf_conntrack了

接下来还需要确保各个节点上已经安装了ipset软件包。 为了便于查看ipvs的代理规则,最好安装一下管理工具ipvsadm。

ipset是iptables的扩展,可以让你添加规则来匹配地址集合。不同于常规的iptables链是线性的存储和遍历,ipset是用索引数据结构存储,甚至对于大型集合,查询效率非常都优秀。

# yum install ipset ipvsadm -y

8. 修改Cgroup Driver

5.1 修改daemon.json

# docker info | grep -i cgroup #默认是cgroupfs

Cgroup Driver: cgroupfs

修改daemon.json,新增: "exec-opts": ["native.cgroupdriver=systemd"]

[root@master ~]# vim /etc/docker/daemon.json

{

"oom-score-adjust": -1000,

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m",

"max-file": "3"

},

"max-concurrent-downloads": 10,

"max-concurrent-uploads": 10,

"bip": "172.17.0.1/16",

"registry-mirrors": ["https://docker.mirrors.ustc.edu.cn"],

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

],

"live-restore": true

}

# docker info |grep -i cgroup #有可能systemd不支持,无法启动,就需要改回cgroupfs

Cgroup Driver: systemd

5.2 重新加载docker

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl restart docker

修改cgroupdriver是为了消除告警:

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

9. 设置kubernetes源(在阿里源)

6.1 新增kubernetes源

[root@master ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

解释:

[] 中括号中的是repository id,唯一,用来标识不同仓库

name 仓库名称,自定义

baseurl 仓库地址

enable 是否启用该仓库,默认为1表示启用

gpgcheck 是否验证从该仓库获得程序包的合法性,1为验证

repo_gpgcheck 是否验证元数据的合法性 元数据就是程序包列表,1为验证

gpgkey=URL 数字签名的公钥文件所在位置,如果gpgcheck值为1,此处就需要指定gpgkey文件的位置,如果gpgcheck值为0就不需要此项了

6.2 更新缓存

[root@master ~]# yum clean all

[root@master ~]# yum -y makecache

三、Master节点安装->对master节点也需要docker

完整的官方文档可以参考:

https://kubernetes.io/docs/setup/independent/create-cluster-kubeadm/

https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm-init/

1. 版本查看

[root@master ~]# yum list kubelet --showduplicates | sort -r

[root@master ~]# yum list kubeadm --showduplicates | sort -r

[root@master ~]# yum list kubectl --showduplicates | sort -r

目前最新版是 1.16.0,该版本支持的docker版本为1.13.1, 17.03, 17.06, 17.09, 18.06, 18.09

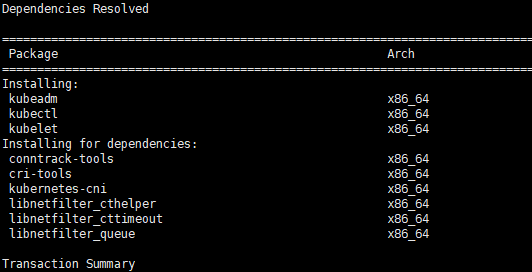

2. 安装指定版本kubelet、kubeadm和kubectl

官方安装文档可以参考:https://kubernetes.io/docs/setup/independent/install-kubeadm/

2.1 安装三个包

[root@master ~]# yum install -y kubelet-1.14.2 kubeadm-1.14.2 kubectl-1.14.2

若不指定版本直接运行 yum install -y kubelet kubeadm kubectl 则默认安装最新版

ps:由于官网未开放同步方式, 可能会有索引gpg检查失败的情况, 这时请用 yum install -y --nogpgcheck kubelet kubeadm kubectl 安装

Kubelet的安装文件:

[root@elk-node-1 ~]# rpm -ql kubelet

/etc/kubernetes/manifests #清单目录

/etc/sysconfig/kubelet #配置文件

/usr/bin/kubelet #主程序

/usr/lib/systemd/system/kubelet.service #unit文件

2.2 安装包说明

-

kubelet 运行在集群所有节点上,用于启动Pod和containers等对象的工具,维护容器的生命周期

-

kubeadm 安装K8S工具,用于初始化集群,启动集群的命令工具

-

kubectl K8S命令行工具,用于和集群通信的命令行,通过kubectl可以部署和管理应用,查看各种资源,创建、删除和更新各种组件

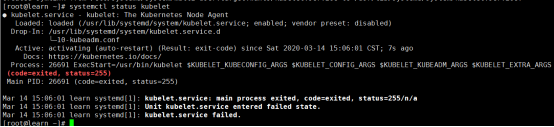

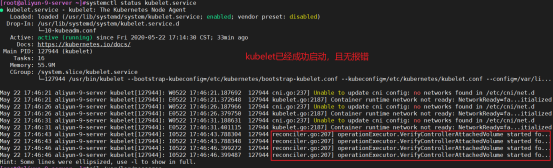

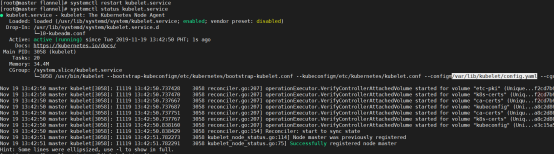

2.3 配置并启动kubelet

配置启动kubelet服务

(1)修改配置文件

[root@master ~]# vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

#KUBE_PROXY=MODE=ipvs

echo 'KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"' > /etc/sysconfig/kubelet

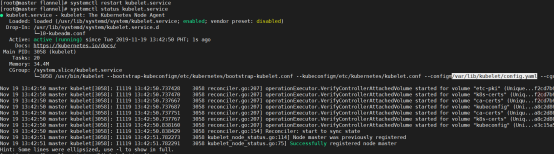

(2)启动kubelet并设置开机启动:

[root@master ~]# systemctl enable kubelet && systemctl start kubelet && systemctl enable --now kubelet

虽然启动失败,但是也得启动,否则集群初始化会卡死

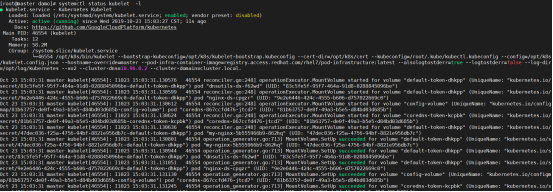

此时kubelet的状态,还是启动失败,通过journalctl -xeu kubelet能看到error信息;只有当执行了kubeadm init后才会启动成功。

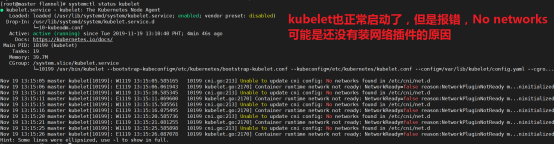

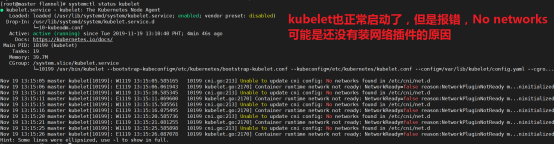

因为K8S集群还未初始化,所以kubelet 服务启动不成功,下面初始化完成,kubelet就会成功启动,但是还是会报错,因为没有部署flannel网络组件

搭建集群时首先保证正常kubelet运行和开机启动,只有kubelet运行才有后面的初始化集群和加入集群操作。

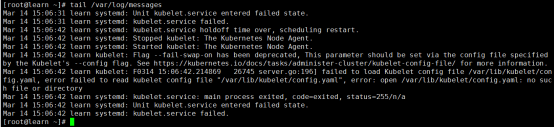

查找启动kubelet失败原因:查看启动状态

systemctl status kubelet

提示信息kubelet.service failed.

查看报错日志

tail /var/log/messages

2.4 kubectl命令补全

kubectl 主要是对pod、service、replicaset、deployment、statefulset、daemonset、job、cronjob、node资源的增删改查

# 安装kubectl自动补全命令包

[root@master ~]# yum install -y bash-completion

# source /usr/share/bash-completion/bash_completion

# source <(kubectl completion bash)

# 添加的当前shell

[root@master ~]# echo "source <(kubectl completion bash)" >> ~/.bash_profile

[root@master ~]# source ~/.bash_profile

# 查看kubectl的版本:

[root@master ~]# kubectl version

3. 下载镜像(建议采取脚本方式下载必须的镜像)

3.1 镜像下载的脚本

Kubernetes几乎所有的安装组件和Docker镜像都放在goolge自己的网站上,直接访问可能会有网络问题,这里的解决办法是从阿里云镜像仓库下载镜像,拉取到本地以后改回默认的镜像tag。

可以通过如下命令导出默认的初始化配置:

$ kubeadm config print init-defaults > kubeadm.yaml

如果将来出了新版本配置文件过时,则使用以下命令转换一下:更新kubeadm文件

# kubeadm config migrate --old-config kubeadm.yaml --new-config kubeadmnew.yaml

打开该文件查看,发现配置的镜像仓库如下:

imageRepository: k8s.gcr.io

在国内该镜像仓库是连不上,可以用国内的镜像代替:

imageRepository: registry.aliyuncs.com/google_containers

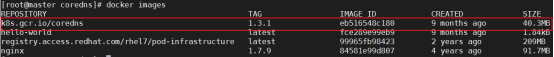

采用国内镜像的方案,由于coredns的标签问题,会导致拉取coredns:v1.3.1拉取失败,这时候我们可以手动拉取,并自己打标签。

打开init-config.yaml,然后进行相应的修改,可以指定kubernetesVersion版本,pod的选址访问等。

查看初始化集群时,需要拉的镜像名

kubeadm config images list

kubernetes镜像拉取命令:

kubeadm config images pull --config=kubeadm.yaml

=======================一般采取改方式下载镜像====================

# 或者用以下方式拉取镜像到本地

[root@master ~]# vim image.sh

#!/bin/bash

url=registry.cn-hangzhou.aliyuncs.com/google_containers

version=v1.14.2

images=(`kubeadm config images list --kubernetes-version=$version|awk -F '/' '{print $2}'`)

for imagename in ${images[@]} ; do

docker pull $url/$imagename

docker tag $url/$imagename k8s.gcr.io/$imagename

docker rmi -f $url/$imagename

done

#采用国内镜像的方案,由于coredns的标签问题,会导致拉取coredns:v1.3.1拉取失败,这时候我们可以手动拉取,并自己打标签。

# another image

# docker pull coredns/coredns:1.3.1 && \

docker tag k8s.gcr.io/coredns:latest k8s.gcr.io/coredns/coredns:v1.3.1

docker rmi k8s.gcr.io/coredns:latest

解释:url为阿里云镜像仓库地址,version为安装的kubernetes版本

3.2 下载镜像

运行脚本image.sh,下载指定版本的镜像

[root@master ~]# bash image.sh

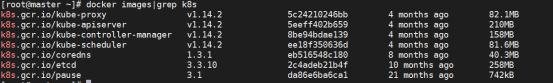

[root@master ~]# docker images|grep k8s

https://www.cnblogs.com/kazihuo/p/10184286.html

### k8s.gcr.io 地址替换

将k8s.gcr.io替换为

registry.cn-hangzhou.aliyuncs.com/google_containers

或者

registry.aliyuncs.com/google_containers

或者

mirrorgooglecontainers

### quay.io 地址替换

将 quay.io 替换为

quay.mirrors.ustc.edu.cn

### gcr.io 地址替换

将 gcr.io 替换为 registry.aliyuncs.com

====================也可以通过dockerhub先去搜索然后pull下来============

[root@master ~]# cat image.sh

#!/bin/bash

images=(kube-apiserver:v1.15.1 kube-controller-manager:v1.15.1 kube-scheduler:v1.15.1 kube-proxy:v1.15.1 pause:3.1 etcd:3.3.10)

for imageName in ${images[@]}

do

docker pull mirrorgooglecontainers/$imageName && \

docker tag mirrorgooglecontainers/$imageName k8s.gcr.io/$imageName &&\

docker rmi mirrorgooglecontainers/$imageName

done

#another image

docker pull coredns/coredns:1.3.1 && \

docker tag coredns/coredns:1.3.1 k8s.gcr.io/coredns:1.3.1

将这些镜像打包推到别的机器上去:

[root@master ~]# docker save -o kubeall.gz k8s.gcr.io/kube-apiserver:v1.15.1 k8s.gcr.io/kube-controller-manager:v1.15.1 k8s.gcr.io/kube-scheduler:v1.15.1 k8s.gcr.io/kube-proxy:v1.15.1 k8s.gcr.io/pause:3.1 k8s.gcr.io/etcd:3.3.10 k8s.gcr.io/coredns:1.3.1

4. 初始化Master

kubeadm init安装失败后需要重新执行,此时要先执行kubeadm reset命令。

kubeadm --help

kubeadm init --help

集群初始化如果遇到问题,可以使用kubeadm reset命令进行清理然后重新执行初始化,然后接下来在 master 节点配置 kubeadm 初始化文件,可以通过如下命令导出默认的

4.1 初始化

获取初始化配置并利用配置文件进行初始化:

$ kubeadm config print init-defaults > kubeadm.yaml

在master节点操作

kubeadm init --config kubeadm.yaml --upload-certs

建议用下面的方式初始化:

[root@master ~]# kubeadm init \

--apiserver-advertise-address=192.168.137.50 \

--kubernetes-version=v1.14.2 \

--apiserver-bind-port 6443 \

--pod-network-cidr=10.244.0.0/16 \

--service-cidr=10.96.0.0/12 \

--ignore-preflight-errors=Swap

--image-repository registry.aliyuncs.com/google_containers 这里不需要更换仓库地址,因为我们第三步的时候已经拉取的相关的镜像

kubeadm join 192.168.137.66:6443 --token fz80zd.n9hihtiiedy38dta \

--discovery-token-ca-cert-hash sha256:837e3c07125993cd1486cddc2dbd36799efb49af9dbb9f7fd2e31bf1bdd810ae

记录kubeadm join的输出,后面需要这个命令将各个节点加入集群中

(注意记录下初始化结果中的kubeadm join命令,部署worker节点时会用到)

[ERROR NumCPU]: the number of available CPUs 1 is less than the required 2

解释:

--apiserver-advertise-address:指明用 Master 的哪个 interface 与 Cluster 的其他节点通信。如果 Master 有多个 interface,建议明确指定,如果不指定,kubeadm 会自动选择有默认网关的 interface

--apiserver-bind-port 6443 :apiserver端口

--kubernetes-version:指定kubeadm版本;我这里下载的时候kubeadm最高时1.14.2版本 --kubernetes-version=v1.14.2。关闭版本探测,因为它的默认值是stable-1,会导致从https://dl.k8s.io/release/stable-1.txt下载最新的版本号,我们可以将其指定为固定版本(最新版:v1.13.1)来跳过网络请求

--pod-network-cidr:指定Pod网络的范围,这里使用flannel(10.244.0.0/16)网络方案;Kubernetes 支持多种网络方案,而且不同网络方案对 --pod-network-cidr 有自己的要求,这里设置为 10.244.0.0/16 是因为我们将使用 flannel 网络方案,必须设置成这个 CIDR

--service-cidr:指定service网段

--image-repository:Kubenetes默认Registries地址是 k8s.gcr.io,在国内并不能访问gcr.io,在1.13版本中我们可以增加--image-repository参数,默认值是 k8s.gcr.io,将其指定为阿里云镜像地址:registry.aliyuncs.com/google_containers

--ignore-preflight-errors=Swap/all:忽略 swap/所有 报错

--ignore-preflight-errors=NumCPU #如果您知道自己在做什么,可以使用'--ignore-preflight-errors'进行非致命检查

--ignore-preflight-errors=Mem

--config string 通过文件来初始化k8s。 Path to a kubeadm configuration file.

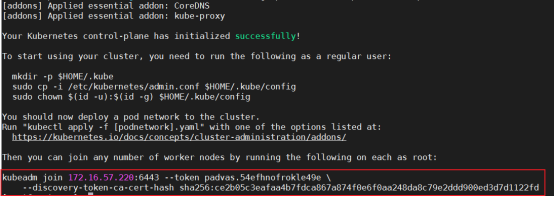

初始化过程说明:

1.[init] Using Kubernetes version: v1.22.4

2.[preflight] kubeadm 执行初始化前的检查。

3.[certs] 生成相关的各种token和证书

4.[kubeconfig] 生成 KubeConfig 文件,kubelet 需要这个文件与 Master 通信

5.[kubelet-start] 生成kubelet的配置文件”/var/lib/kubelet/config.yaml”

6.[control-plane] 安装 Master 组件,如果本地没有相关镜像,那么会从指定的 Registry 下载组件的 Docker 镜像。

7.[bootstraptoken] 生成token记录下来,后边使用kubeadm join往集群中添加节点时会用到

8.[addons] 安装附加组件 kube-proxy 和 coredns。

9.Kubernetes Master 初始化成功,提示如何配置常规用户使用kubectl访问集群。

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

10.提示如何安装 Pod 网络。

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

11.提示如何注册其他节点到 Cluster

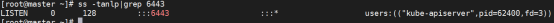

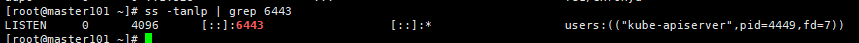

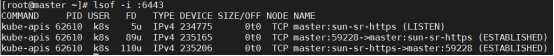

开启了kube-apiserver 的6443端口:

[root@master ~]# ss -tanlp|grep 6443

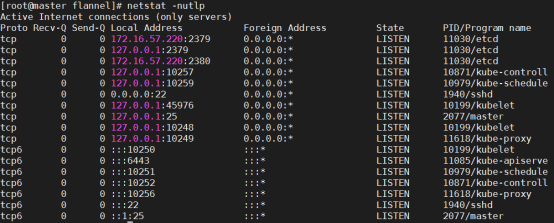

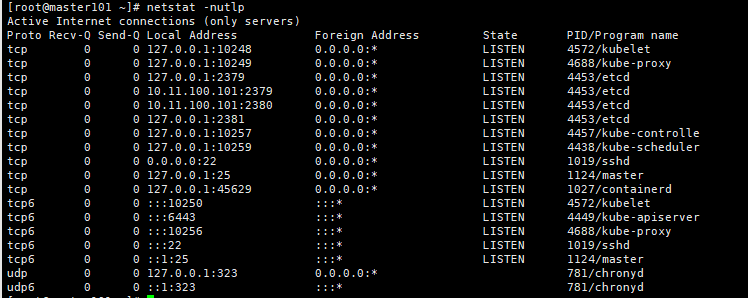

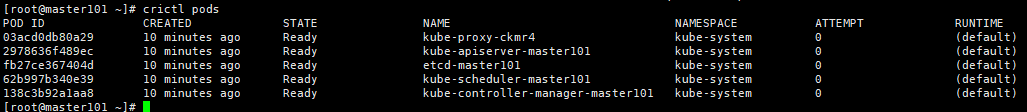

各个服务的端口:master初始化过后各个服务就正常启动了

查看docker运行了那些服务了:

# systemctl status kubelet.service

是没有装flannel的原因,装了重启kubelet就正常了:

# ll /etc/kubernetes/#生成了各个组件的配置文件

total 36

-rw------- 1 root root 5453 Nov 19 13:10 admin.conf

-rw------- 1 root root 5485 Nov 19 13:10 controller-manager.conf

-rw------- 1 root root 5461 Nov 19 13:10 kubelet.conf

drwxr-xr-x 2 root root 113 Nov 19 13:10 manifests

drwxr-xr-x 3 root root 4096 Nov 19 13:10 pki

-rw------- 1 root root 5437 Nov 19 13:10 scheduler.conf

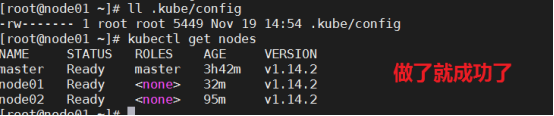

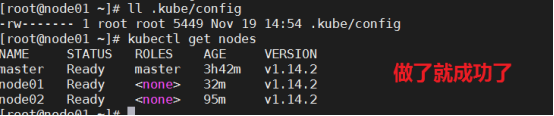

5. 配置kubectl

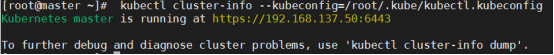

4.1 配置 kubectl->加载环境变量

kubectl 是管理 Kubernetes Cluster 的命令行工具, Master 初始化完成后需要做一些配置工作才能使用kubectl,这里直接配置root用户:(实际操作时只配置root用户,部署flannel会报错,最后也把node节点用户也弄上)

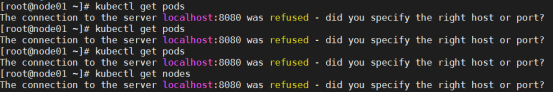

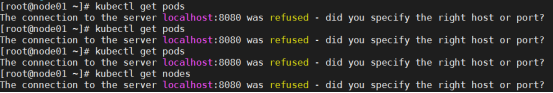

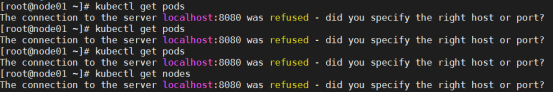

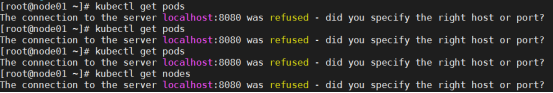

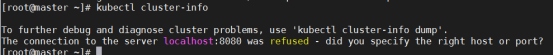

如果k8s服务端提示The connection to the server localhost:8080 was refused - did you specify the right host or port?出现这个问题的原因是kubectl命令需要使用kubernetes-admin来运行

[root@master ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@master ~]# source ~/.bash_profile

4.2 普通用户可以参考 kubeadm init 最后提示:

复制admin.conf并修改权限,否则部署flannel网络插件报下面错误

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

4.3 node节点支持kubelet:

如果不做这个操作:node操作集群也会报错如下

scp /etc/kubernetes/admin.conf node1:/etc/kubernetes/admin.conf

scp /etc/kubernetes/admin.conf node2:/etc/kubernetes/admin.conf

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

Kubernetes 集群默认需要加密方式访问,以上操作就是将刚刚部署生成的 Kubernetes 集群的安全配置文件保存到当前用户的.kube目录下,kubectl默认会使用这个目录下的授权信息访问 Kubernetes 集群。

如果不这么做的话,我们每次都需要通过 export KUBECONFIG 环境变量告诉 kubectl 这个安全配置文件的位置

最后就可以使用kubctl命令了:

[root@master ~]# kubectl get nodes #NotReady 是因为还没有安装网络插件

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 37m v1.22.4

[root@master ~]# kubectl get pod -A #-A查看 所以pod

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-78fcd69978-78kn4 0/1 Pending 0 33m

kube-system coredns-78fcd69978-nkcx5 0/1 Pending 0 33m

kube-system etcd-master 1/1 Running 0 33m

kube-system kube-apiserver-master 1/1 Running 0 33m

kube-system kube-controller-manager-master 1/1 Running 1 33m

kube-system kube-proxy-bv42k 1/1 Running 0 33m

kube-system kube-scheduler-master 1/1 Running 1 33m

如果pod处于失败状态,那么不能用kubectl logs -n kube-system coredns-78fcd69978-78kn4 来查看日志。只能用 kubectl logs -n kube-system coredns-78fcd69978-78kn4 来看错误信息

这里没有coredns启动失败是因为还没有部署网络插件

[root@master ~]# kubectl get ns #查看命令空间

NAME STATUS AGE

default Active 37m

kube-node-lease Active 37m

kube-public Active 37m

kube-system Active 37m

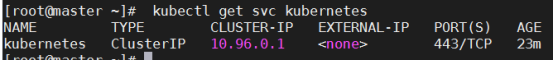

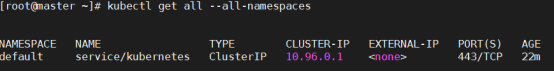

[root@master ~]# kubectl get svc #切记不要删除这个svc,这是集群最基本的配置

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 47m

10.96.0.1 这个地址就是初始化集群时指定的--service-cidr=10.96.0.0/12 ,进行分配的地址

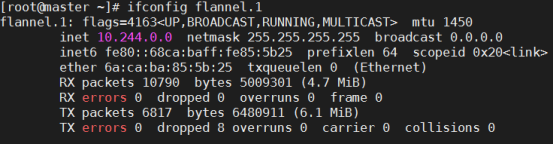

6. 安装pod网络->就是flannel网络插件

【注意】:正常在生产环境不能这么搞,flannel一删除,所有Pod都不能运行了,因为没有网络。系统刚装完就要去调整flannel

Deploying flannel manually

文档地址:https://github.com/coreos/flannel

要让 Kubernetes Cluster 能够工作,必须安装 Pod 网络,否则 Pod 之间无法通信。

Kubernetes 支持多种网络方案,这里我们使用 flannel

Pod正确运行,并且默认会分配10.244.开头的集群IP

如果kubernetes是新版本,那么flannel也可以直接用新版。否则需要找一下对于版本

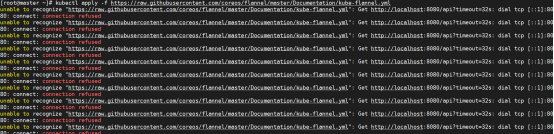

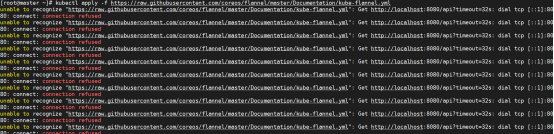

[root@master ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

或者直接下载阿里云的吧(但是新版本apply报错):

https://github.com/coreos/flannel/raw/master/Documentation/kube-flannel-aliyun.yml

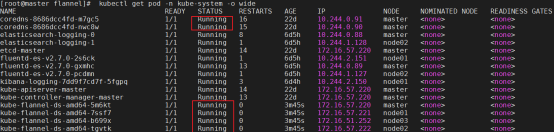

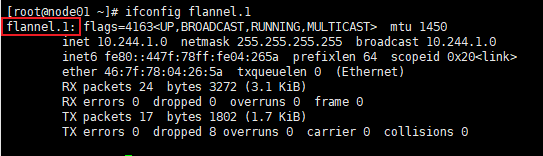

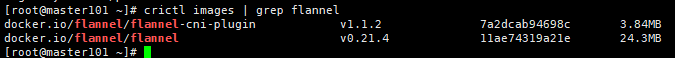

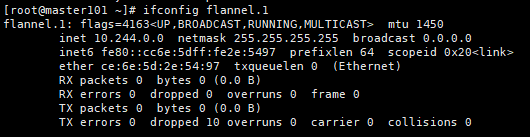

(2)看到下载好的flannel 的镜像

[root@master ~]# docker image ls |grep flannel

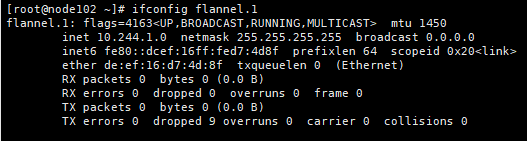

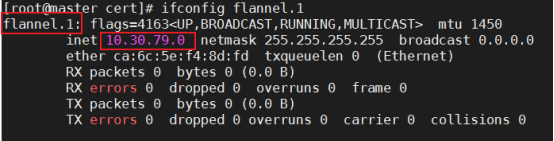

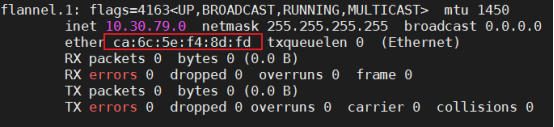

[root@master ~]# ifconfig flannel.1

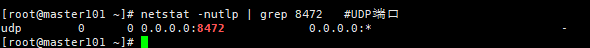

# netstat -nutlp|grep 8472 #UDP端口

(3)验证

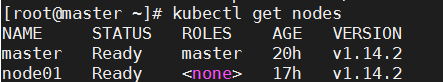

① master节点已经Ready #安装了flannel后,master就Ready

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 42m v1.14.2

发现主节点在notready状态,因为还没安装网络插件,列如:funnel

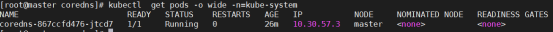

② 查询kube-system名称空间下

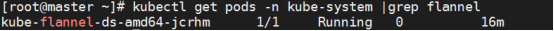

语法:kubectl get pods -n kube-system(指定名称空间) |grep flannel

[root@master ~]# kubectl get pods -n kube-system |grep flannel

kube-flannel-ds-amd64-jcrhm 1/1 Running 0 16m

[root@master ~]# kubectl logs -n kube-system kube-flannel-ds-amd64-jcrhm #查看日志

可以看到,所有的系统 Pod 都成功启动了,而刚刚部署的flannel网络插件则在 kube-system 下面新建了一个名叫kube-flannel-ds-amd64-jcrhm的 Pod,一般来说,这些 Pod就是容器网络插件在每个节点上的控制组件。

Kubernetes 支持容器网络插件,使用的是一个名叫 CNI 的通用接口,它也是当前容器网络的事实标准,市面上的所有容器网络开源项目都可以通过 CNI 接入 Kubernetes,比如 Flannel、Calico、Canal、Romana 等等,它们的部署方式也都是类似的"一键部署"

如果pod提示Init:ImagePullBackOff,说明这个pod的镜像在对应节点上拉取失败,我们可以通过 kubectl describe pod pod_name 查看 Pod 具体情况,以确认拉取失败的镜像

[root@master ~]# kubectl describe pod kube-flannel-ds-amd64-jcrhm --namespace=kube-system

可能无法从 quay.io/coreos/flannel:v0.10.0-amd64 下载镜像,可以从阿里云或者dockerhub镜像仓库下载,然后改回kube-flannel.yml文件里对应的tag即可:

docker pull registry.cn-hangzhou.aliyuncs.com/kubernetes_containers/flannel:v0.10.0-amd64

docker tag registry.cn-hangzhou.aliyuncs.com/kubernetes_containers/flannel:v0.10.0-amd64 quay.io/coreos/flannel:v0.10.0-amd64

docker rmi registry.cn-hangzhou.aliyuncs.com/kubernetes_containers/flannel:v0.10.0-amd64

7. 使用kubectl命令查询集群信息

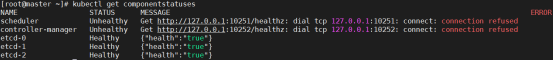

查询组件状态信息:确认各个组件都处于healthy状态

[root@master ~]# kubectl get cs || kubectl get componentstatus #查看组件状态

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

为什么没有apiservice呢? ->你能查到信息,说明apiservice以及运行成功了

查询集群节点信息(如果还没有部署好flannel,所以节点显示为NotReady):

[root@master ~]# kubectl get nodes || kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION

master Ready master 107m v1.14.2

# kubectl get node -o wide #详细信息

# kubectl describe node master #更加详细信息,通过 kubectl describe 指令的输出,我们可以看到 Ready 的原因在于,我们已经部署了网络插件

[root@master ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-78fcd69978-78kn4 1/1 Running 0 96m

kube-system coredns-78fcd69978-nkcx5 1/1 Running 0 96m

kube-system etcd-master 1/1 Running 0 96m

kube-system kube-apiserver-master 1/1 Running 0 96m

kube-system kube-controller-manager-master 1/1 Running 1 96m

kube-system kube-flannel-ds-4xz74 1/1 Running 0 13m

kube-system kube-proxy-bv42k 1/1 Running 0 96m

kube-system kube-scheduler-master 1/1 Running 1 96m

coredns已经成功启动

查询名称空间,默认:

[root@master ~]# kubectl get ns

NAME STATUS AGE

default Active 107m

kube-node-lease Active 107m

kube-public Active 107m

kube-system Active 107m

我们还可以通过 kubectl 检查这个节点上各个系统 Pod 的状态,其中,kube-system 是 Kubernetes 项目预留的系统 Pod 的工作空间(Namepsace,注意它并不是 Linux Namespace,它只是 Kubernetes 划分不同工作空间的单位)

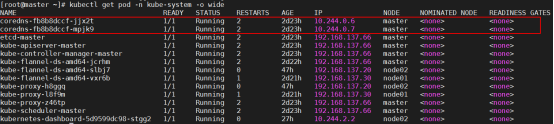

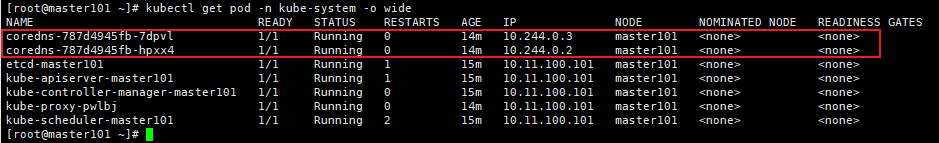

[root@master ~]# kubectl get pod -n kube-system -o wide

如果,CoreDNS依赖于网络的 Pod 都处于 Pending 状态,即调度失败。因为这个 Master 节点的网络尚未就绪,这里我们已经部署了网络了,所以是Running

注:

因为kubeadm需要拉取必要的镜像,这些镜像需要"科学上网";所以可以先在docker hub或其他镜像仓库拉取kube-proxy、kube-scheduler、kube-apiserver、kube-controller-manager、etcd、pause、coredns、flannel镜像;并加上 --ignore-preflight-errors=all 忽略所有报错即可

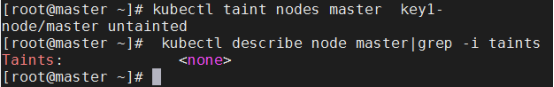

8. master节点配置(污点)

出于安全考虑,默认配置下Kubernetes不会将Pod调度到Master节点。taint:污点的意思。如果一个节点被打上了污点,那么pod是不允许运行在这个节点上面的

6.1 删除master节点默认污点

默认情况下集群不会在master上调度pod,如果偏想在master上调度Pod,可以执行如下操作:

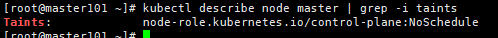

查看污点(Taints)字段默认配置:

[root@master ~]# kubectl describe node master | grep -i taints

Taints: node-role.kubernetes.io/master:NoSchedule

删除默认污点:

[root@master ~]# kubectl taint nodes master node-role.kubernetes.io/master-

node/master untainted

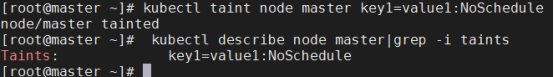

6.2 污点机制

语法:

语法:

kubectl taint node [node_name] key_name=value_name[effect]

其中[effect] 可取值: [ NoSchedule | PreferNoSchedule | NoExecute ]

NoSchedule: 一定不能被调度

PreferNoSchedule: 尽量不要调度

NoExecute: 不仅不会调度, 还会驱逐Node上已有的Pod

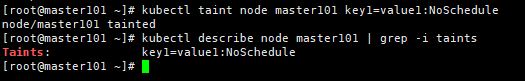

打污点:

[root@master ~]# kubectl taint node master key1=value1:NoSchedule

[root@master ~]# kubectl describe node master|grep -i taints

Taints: key1=value1:NoSchedule

key为key1,value为value1(value可以为空),effect为NoSchedule表示一定不能被调度

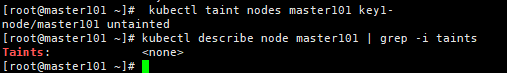

删除污点:

[root@master ~]# kubectl taint nodes master key1-

[root@master ~]# kubectl describe node master|grep -i taints

Taints: <none>

删除指定key所有的effect,'-'表示移除所有以key1为键的污点

四、Node节点安装

Kubernetes 的 Worker 节点跟 Master 节点几乎是相同的,它们运行着的都是一个 kubelet 组件。唯一的区别在于,在 kubeadm init 的过程中,kubelet 启动后,Master 节点上还会自动运行 kube-apiserver、kube-scheduler、kube-controller-manger这三个系统 Pod

1. 安装kubelet、kubeadm和kubectl

同master节点一样操作:在node节点安装kubeadm、kubelet、kubectl

yum install -y kubelet-1.14.2 kubeadm-1.14.2 kubectl-1.14.2

systemctl enable kubelet

systemctl start kubelet

说明:其实可以不安装kubectl,因为你如果不在node上操作,就可以不用安装

2. 下载镜像

同master节点一样操作,同时node上面也需要flannel镜像

拉镜像和打tag,以下三个镜像是node节点运行起来的必要镜像(pause、kube-proxy、kube-flannel(如果本地没有镜像,在加入集群的时候自动拉取镜像然后启动))

[root@node01 ~]# docker pull mirrorgooglecontainers/pause:3.1

[root@node01 ~]# docker pull mirrorgooglecontainers/kube-proxy:v1.14.0

[root@node01 ~]# docker pull quay.io/coreos/flannel:v0.11.0-amd64

打上标签:

[root@elk-node-1 ~]# docker tag mirrorgooglecontainers/kube-proxy:v1.14.0 k8s.gcr.io/kube-proxy:v1.14.0

[root@elk-node-1 ~]# docker tag mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1

[root@elk-node-1 ~]# docker tag mirrorgooglecontainers/flannel:v0.11.0-amd64 quay.io/coreos/flannel:v0.11.0-amd64

3. 加入集群

3.1-3.3在master上执行

3.1 查看令牌

[root@master ~]# kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

fz80zd.n9hihtiiedy38dta 22h 2019-10-01T16:02:44+08:00 authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token

如果发现之前初始化时的令牌已过期,就在生成令牌

3.2 生成新的令牌

[root@master ~]# kubeadm token create

f4e26l.xxox4o5gxuj3l6ud

或者:kubeadm token create --print-join-command

3.3 生成新的加密串,计算出token的hash值

[root@master ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | \

openssl dgst -sha256 -hex | sed 's/^.* //'

837e3c07125993cd1486cddc2dbd36799efb49af9dbb9f7fd2e31bf1bdd810ae

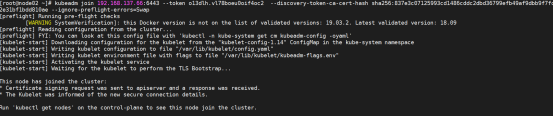

3.4 node节点加入集群 ->加入集群就相当于初始化node节点了

在node节点上分别执行如下操作:

语法:

kubeadm join master_ip:6443 --token token_ID --discovery-token-ca-cert-hash sha256:生成的加密串

[root@node01 ~]# kubeadm join 192.168.137.66:6443 \

--token fz80zd.n9hihtiiedy38dta \

--discovery-token-ca-cert-hash sha256:837e3c07125993cd1486cddc2dbd36799efb49af9dbb9f7fd2e31bf1bdd810ae \

--ignore-preflight-errors=Swap

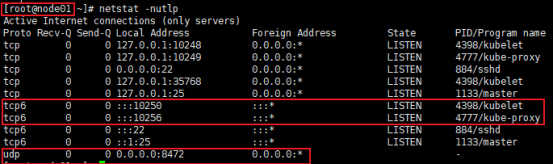

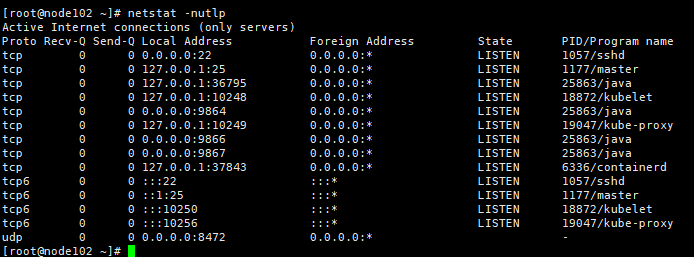

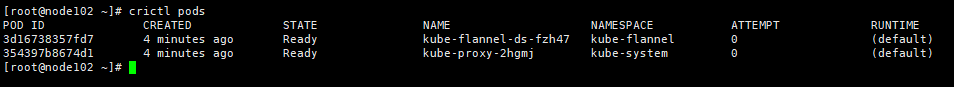

node节点加入集群启动的服务:(加入集群成功后自动成功启动了kubelet)

master上面查看node01已经加入集群了

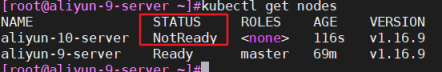

这是部署了网络组件的,STATUS才为Ready;没有安装网络组件状态如下:

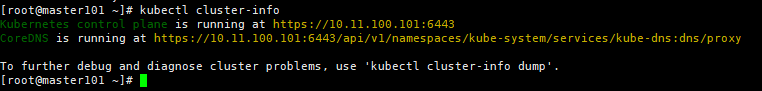

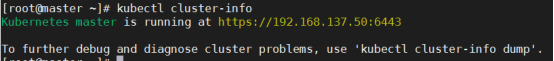

[root@master ~]# kubectl cluster-info

Kubernetes control plane is running at https://192.168.137.66:6443

CoreDNS is running at https://192.168.137.66:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

[root@master ~]# kubectl get nodes,cs,ns,pods -A

NAME STATUS ROLES AGE VERSION

node/master Ready control-plane,master 5h7m v1.22.4

node/node01 Ready <none> 19m v1.22.4

NAME STATUS MESSAGE ERROR

componentstatus/scheduler Healthy ok

componentstatus/controller-manager Healthy ok

componentstatus/etcd-0 Healthy {"health":"true","reason":""}

NAME STATUS AGE

namespace/default Active 5h7m

namespace/kube-node-lease Active 5h7m

namespace/kube-public Active 5h7m

namespace/kube-system Active 5h7m

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system pod/coredns-78fcd69978-78kn4 1/1 Running 0 5h7m

kube-system pod/coredns-78fcd69978-nkcx5 1/1 Running 0 5h7m

kube-system pod/etcd-master 1/1 Running 0 5h7m

kube-system pod/kube-apiserver-master 1/1 Running 0 5h7m

kube-system pod/kube-controller-manager-master 1/1 Running 0 3h19m

kube-system pod/kube-flannel-ds-4xz74 1/1 Running 0 3h44m

kube-system pod/kube-flannel-ds-wfcpz 1/1 Running 0 19m

kube-system pod/kube-proxy-bv42k 1/1 Running 0 5h7m

kube-system pod/kube-proxy-vzlbk 1/1 Running 0 19m

kube-system pod/kube-scheduler-master 1/1 Running 0 3h19m

加入一个node节点的已完成,以上是所以启动的服务(没有部署其他服务哈)

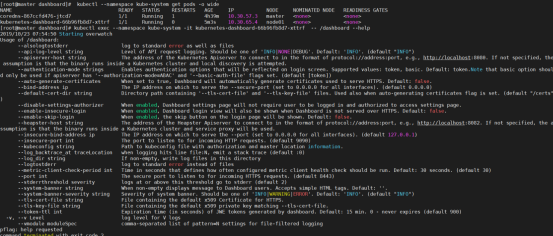

五、Dashboard(部署dashboard v1.10.1版本)

官方文件目录:

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dashboard

https://github.com/kubernetes/dashboard

三方参考文档:

https://blog.csdn.net/networken/article/details/85607593

在 Kubernetes 社区中,有一个很受欢迎的 Dashboard 项目,它可以给用户提供一个可视化的 Web 界面来查看当前集群的各种信息。

用户可以用 Kubernetes Dashboard部署容器化的应用、监控应用的状态、执行故障排查任务以及管理 Kubernetes 各种资源

1. 下载yaml

由于yaml配置文件中指定镜像从google拉取,网络访问不通,先下载yaml文件到本地,修改配置从阿里云仓库拉取镜像

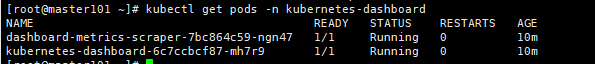

dashboard v2.4.0资源有相应的改动,部署在kubernetes-dashboard命名空间,一切参考官网

[root@master ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

2. 配置yaml

1、如果国内无法拉取镜像,那么需要修改为阿里云镜像地址

[root@master ~]# cat kubernetes-dashboard.yaml

…………

containers:

- name: kubernetes-dashboard

# image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1

ports:

…………

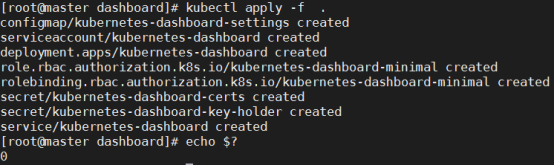

3. 部署dashboard服务

部署2种命令:

[root@master ~]# kubectl create -f kubernetes-dashboard.yaml

secret/kubernetes-dashboard-certs created

serviceaccount/kubernetes-dashboard created

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

deployment.apps/kubernetes-dashboard created

service/kubernetes-dashboard created

或者使用apply部署# kubectl apply -f kubernetes-dashboard.yaml

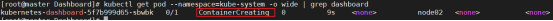

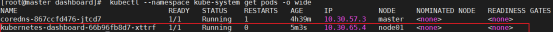

状态查看:

查看Pod 的状态为running说明dashboard已经部署成功

[root@master ~]# kubectl get pod --namespace=kube-system -o wide | grep dashboard

[root@master ~]# kubectl get pods -n kube-system -o wide|grep dashboard

Dashboard 会在 kube-system namespace 中创建自己的 Deployment 和 Service:

[root@master ~]# kubectl get deployment kubernetes-dashboard --namespace=kube-system

NAME READY UP-TO-DATE AVAILABLE AGE

kubernetes-dashboard 1/1 1 1 2m59s

[root@master ~]# kubectl get deployment kubernetes-dashboard -n kube-system

NAME READY UP-TO-DATE AVAILABLE AGE

kubernetes-dashboard 1/1 1 1 91m

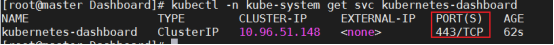

获取dashboard的service访问端口:

[root@master ~]# kubectl get services -n kube-system

[root@master ~]# kubectl get service kubernetes-dashboard --namespace=kube-system

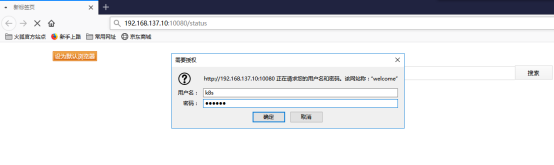

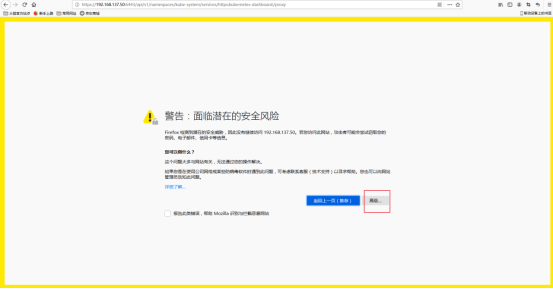

4. 访问dashboard

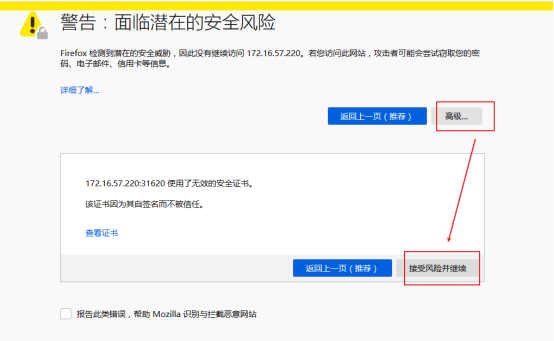

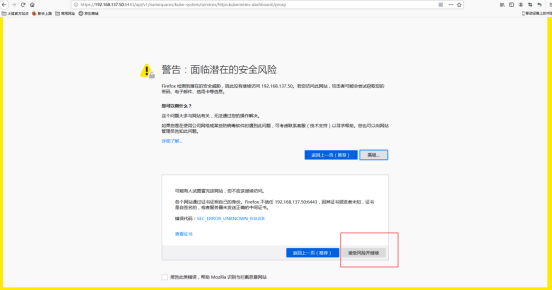

注意:要用火狐浏览器打开,其他浏览器打不开的!

官方参考文档:https://kubernetes.io/docs/tasks/access-application-cluster/web-ui-dashboard/#accessing-the-dashboard-ui

有以下几种方式访问dashboard:

有以下几种方式访问dashboard:

Nodport方式访问dashboard,service类型改为NodePort

loadbalacer方式,service类型改为loadbalacer

Ingress方式访问dashboard

API server方式访问 dashboard

kubectl proxy方式访问dashboard

NodePort方式

只建议在开发环境,单节点的安装方式中使用

为了便于本地访问,修改yaml文件,将service改为NodePort 类型:

配置NodePort,外部通过https://NodeIp:NodePort 访问Dashboard,此时端口为31620

[root@master ~]# cat kubernetes-dashboard.yaml

…………

---

# ------------------- Dashboard Service ------------------- #

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

spec:

type: NodePort #增加type: NodePort

ports:

- port: 443

targetPort: 8443

nodePort: 31620 #增加nodePort: 31620

selector:

k8s-app: kubernetes-dashboard

重新应用yaml文件:

[root@master ~]# kubectl apply -f kubernetes-dashboard.yaml

secret/kubernetes-dashboard-certs unchanged

serviceaccount/kubernetes-dashboard unchanged

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal unchanged

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal unchanged

deployment.apps/kubernetes-dashboard unchanged

service/kubernetes-dashboard configured

查看service,TYPE类型已经变为NodePort,端口为31620:

#这是已经用NodePort暴露了端口,默认文件是cluster-ip方式

[root@master ~]# kubectl -n kube-system get svc kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.111.214.172 <none> 443:31620/TCP 26h

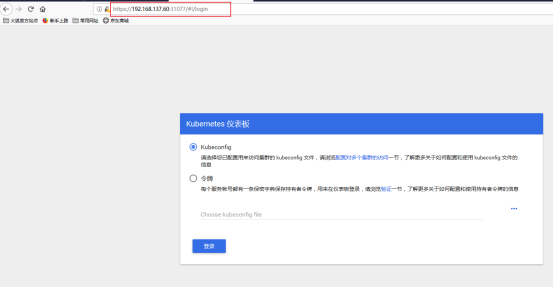

通过浏览器访问:https://192.168.137.66:31620/ 登录界面如下:

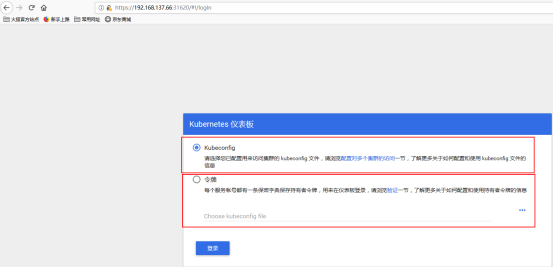

Dashboard 支持 Kubeconfig 和 Token 两种认证方式,我们这里选择Token认证方式登录:

创建登录用户: 官方参考文档:https://github.com/kubernetes/dashboard/wiki/Creating-sample-user 创建dashboard-adminuser.yaml:

[root@master ~]#vim dashboard-adminuser.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin-user

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: admin-user

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin-user

namespace: kube-system

说明:上面创建了一个叫admin-user的服务账号,并放在kube-system命名空间下,并将cluster-admin角色绑定到admin-user账户,这样admin-user账户就有了管理员的权限。默认情况下,kubeadm创建集群时已经创建了cluster-admin角色,我们直接绑定即可

执行yaml文件:

[root@master ~]# kubectl apply -f dashboard-adminuser.yaml

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

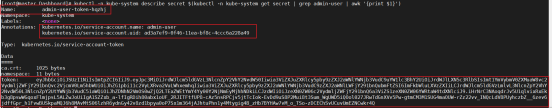

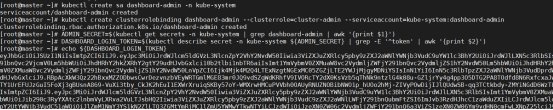

查看admin-user账户的token(令牌):

[root@master ~]# kubectl describe secrets -n kube-system admin-user

[root@master ~]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

把获取到的Token复制到登录界面的Token输入框中:

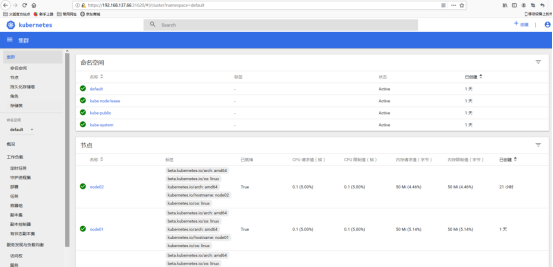

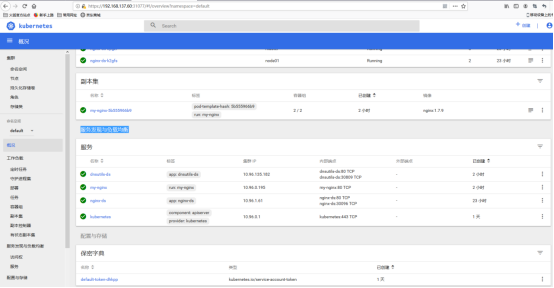

成功登陆dashboard:

--------------------------------------------------------------------------

或者直接在kubernetes-dashboard.yaml文件中追加

创建超级管理员的账号用于登录Dashboard

cat >> kubernetes-dashboard.yaml << EOF

---# ------------------- dashboard-admin ------------------- #

apiVersion: v1

kind: ServiceAccount

metadata:

name: dashboard-admin

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: dashboard-admin

subjects:

- kind: ServiceAccount

name: dashboard-admin

namespace: kube-system

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

EOF

部署访问:

部署Dashboard

[root@master ~]# kubectl apply -f kubernetes-dashboard.yaml

状态查看:

[root@master ~]# kubectl get deployment kubernetes-dashboard -n kube-system

[root@master ~]# kubectl get pods -n kube-system -o wide

[root@master ~]# kubectl get services -n kube-system

令牌查看:

[root@master ~]# kubectl describe secrets -n kube-system dashboard-admin

访问

loadbalacer方式

首先需要部署metallb负载均衡器,部署参考:

https://blog.csdn.net/networken/article/details/85928369

LoadBalancer 更适合结合云提供商的 LB 来使用,但是在 LB 越来越多的情况下对成本的花费也是不可小觑

Ingress方式

详细部署参考:

https://blog.csdn.net/networken/article/details/85881558

https://www.kubernetes.org.cn/1885.html

github地址:

https://github.com/kubernetes/ingress-nginx

https://kubernetes.github.io/ingress-nginx/

基于 Nginx 的 Ingress Controller 有两种:

一种是 k8s 社区提供的: https://github.com/nginxinc/kubernetes-ingress

另一种是 Nginx 社区提供的 :

https://kubernetes.io/docs/concepts/services-networking/ingress/

关于两者的区别见:

https://github.com/nginxinc/kubernetes-ingress/blob/master/docs/nginx-ingress-controllers.md

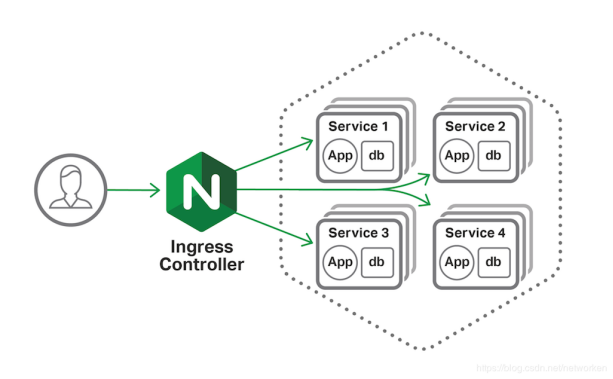

Ingress-nginx简介

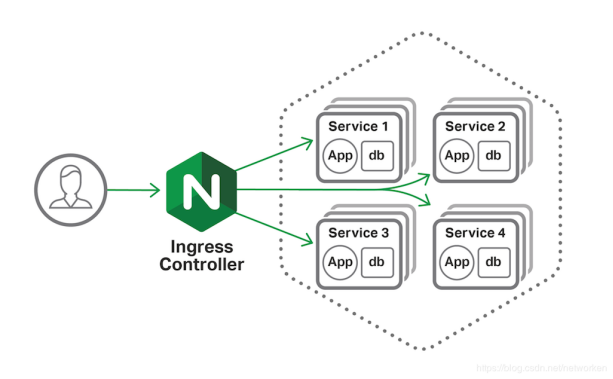

Ingress 是 k8s 官方提供的用于对外暴露服务的方式,也是在生产环境用的比较多的方式,一般在云环境下是 LB + Ingress Ctroller 方式对外提供服务,这样就可以在一个 LB 的情况下根据域名路由到对应后端的 Service,有点类似于 Nginx 反向代理,只不过在 k8s 集群中,这个反向代理是集群外部流量的统一入口

Pod的IP以及service IP只能在集群内访问,如果想在集群外访问kubernetes提供的服务,可以使用nodeport、proxy、loadbalacer以及ingress等方式,由于service的IP集群外不能访问,就是使用ingress方式再代理一次,即ingress代理service,service代理pod.

Ingress将开源的反向代理负载均衡器(如 Nginx、Apache、Haproxy等)与k8s进行集成,并可以动态的更新Nginx配置等,是比较灵活,更为推荐的暴露服务的方式,但也相对比较复杂

Ingress基本原理图如下:

部署nginx-ingress-controller:

下载nginx-ingress-controller配置文件:

[root@master ~]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.21.0/deploy/mandatory.yaml

修改镜像路径:

#替换镜像路径

vim mandatory.yaml

......

#image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.21.0

image: willdockerhub/nginx-ingress-controller:0.21.0

......

执行yaml文件部署

[root@master ~]# kubectl apply -f mandatory.yaml

namespace/ingress-nginx created

configmap/nginx-configuration created

serviceaccount/nginx-ingress-serviceaccount created

clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created

role.rbac.authorization.k8s.io/nginx-ingress-role created

rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created

clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created

deployment.extensions/nginx-ingress-controller created

创建Dashboard TLS证书:

[root@master ~]# mkdir -p /usr/local/src/kubernetes/certs

[root@master ~]# cd /usr/local/src/kubernetes

[root@master kubernetes]# openssl genrsa -des3 -passout pass:x -out certs/dashboard.pass.key 2048

Generating RSA private key, 2048 bit long modulus

..........+++

.................+++

e is 65537 (0x10001)

[root@master kubernetes]# openssl rsa -passin pass:x -in certs/dashboard.pass.key -out certs/dashboard.key

writing RSA key

[root@master kubernetes]# openssl req -new -key certs/dashboard.key -out certs/dashboard.csr -subj '/CN=kube-dashboard'

[root@master kubernetes]# openssl x509 -req -sha256 -days 365 -in certs/dashboard.csr -signkey certs/dashboard.key -out certs/dashboard.crt

Signature ok

subject=/CN=kube-dashboard

Getting Private key

[root@master kubernetes]# ls

certs

[root@master kubernetes]# tree certs/

certs/

├── dashboard.crt

├── dashboard.csr

├── dashboard.key

└── dashboard.pass.key

0 directories, 4 files

[root@master kubernetes]# rm certs/dashboard.pass.key

rm: remove regular file ‘certs/dashboard.pass.key’? y

[root@master kubernetes]# kubectl create secret generic kubernetes-dashboard-certs --from-file=certs -n kube-system

Error from server (AlreadyExists): secrets "kubernetes-dashboard-certs" already exists

[root@master kubernetes]# tree certs/

certs/

├── dashboard.crt

├── dashboard.csr

└── dashboard.key

0 directories, 3 files

[root@master kubernetes]# kubectl create secret generic kubernetes-dashboard-certs --from-file=certs -n kube-system

Error from server (AlreadyExists): secrets "kubernetes-dashboard-certs" already exists

[root@master kubernetes]# kubectl create secret generic kubernetes-dashboard-certs1 --from-file=certs -n kube-system

secret/kubernetes-dashboard-certs1 created

创建ingress规则:

文件末尾添加tls配置项即可:

[root@master kubernetes]# vim kubernetes-dashboard-ingress.yaml

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

labels:

k8s-app: kubernetes-dashboard

annotations:

kubernetes.io/ingress.class: "nginx"

https://github.com/kubernetes/ingress-nginx/blob/master/docs/user-guide/nginx-configuration/annotations.md

nginx.ingress.kubernetes.io/ssl-redirect: "true"

nginx.ingress.kubernetes.io/ssl-passthrough: "true"

name: kubernetes-dashboard

namespace: kube-system

spec:

rules:

- host: dashboard.host.com

http:

paths:

- path: /

backend:

servicePort: 443

serviceName: kubernetes-dashboard

tls:

- hosts:

- dashboard.host.com

secretName: kubernetes-dashboard-certs

访问这个域名: dashboard.host.com

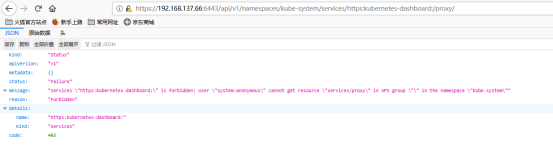

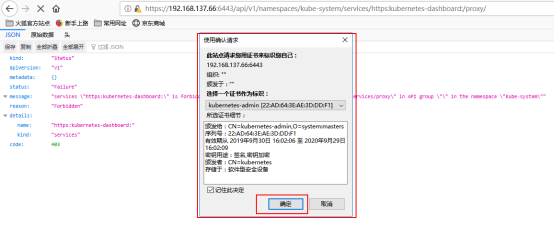

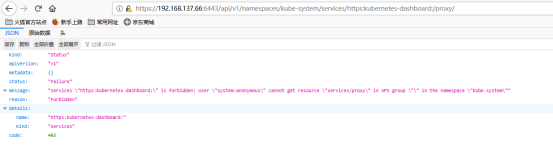

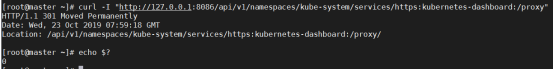

API Server方式(建议采用这种方式)

->如果Kubernetes API服务器是公开的,并可以从外部访问,那我们可以直接使用API Server的方式来访问,也是比较推荐的方式

如果Kubernetes API服务器是公开的,并可以从外部访问,那我们可以直接使用API Server的方式来访问,也是比较推荐的方式。

Dashboard的访问地址为:

https://<master-ip>:<apiserver-port>/api/v1/namespaces/Dashboard_NameSpacesNname/services/https:kubernetes-dashboard:/proxy/

https://<master-ip>:<apiserver-port>/api/v1/namespaces/Dashboard_NameSpacesNname/services/https:Dashboard_NameSpacesNname:/proxy/

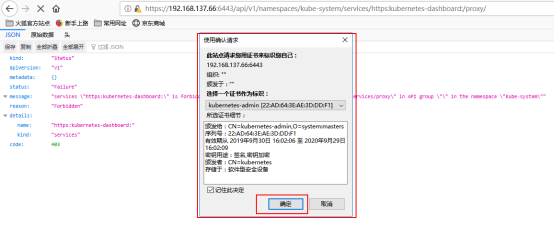

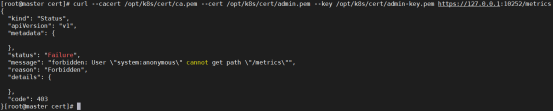

但是浏览器返回的结果可能如下:

这是因为最新版的k8s默认启用了RBAC,并为未认证用户赋予了一个默认的身份:anonymous。

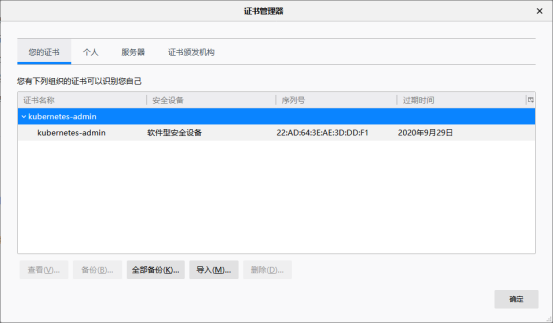

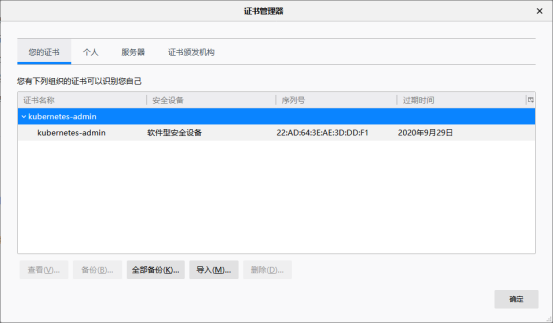

对于API Server来说,它是使用证书进行认证的,我们需要先创建一个证书:

我们使用client-certificate-data和client-key-data生成一个p12文件,可使用下列命令:

mkdir /dashboard

cd /dashboard

# 生成client-certificate-data

grep 'client-certificate-data' ~/.kube/config | head -n 1 | awk '{print $2}' | base64 -d >> kubecfg.crt

# 生成client-key-data

grep 'client-key-data' ~/.kube/config | head -n 1 | awk '{print $2}' | base64 -d >> kubecfg.key

# 生成p12

[root@master dashboard]# openssl pkcs12 -export -clcerts -inkey kubecfg.key -in kubecfg.crt -out kubecfg.p12 -name "kubernetes-client"

Enter Export Password:

Verifying - Enter Export Password:

[root@master ~]# ll -t

-rw-r--r--. 1 root root 2464 Oct 2 15:19 kubecfg.p12

-rw-r--r--. 1 root root 1679 Oct 2 15:18 kubecfg.key

-rw-r--r--. 1 root root 1082 Oct 2 15:18 kubecfg.crt

最后导入上面生成的kubecfg.p12文件,重新打开浏览器,显示如下:(不知道怎么导入证书,自己百度)

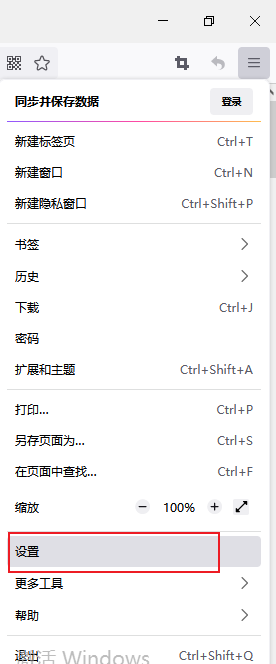

浏览器的设置->搜索证书

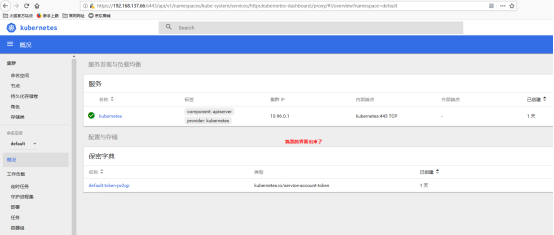

点击确定,便可以看到熟悉的登录界面了: 我们可以使用一开始创建的admin-user用户的token进行登录,一切OK

再次访问浏览器会弹出下面信息,点击确定

然后进入登录界面,选择令牌:

输入token,进入登录:

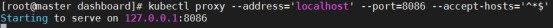

Porxy方式

如果要在本地访问dashboard,可运行如下命令:

$ kubectl proxy

Starting to serve on 127.0.0.1:8001

现在就可以通过以下链接来访问Dashborad UI:

http://localhost:8001/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/

这种方式默认情况下,只能从本地访问(启动它的机器)。

我们也可以使用--address和--accept-hosts参数来允许外部访问:

$ kubectl proxy --address='0.0.0.0' --accept-hosts='^*$'

Starting to serve on [::]:8001

然后我们在外网访问以下链接:

http://

可以成功访问到登录界面,但是填入token也无法登录,这是因为Dashboard只允许localhost和127.0.0.1使用HTTP连接进行访问,而其它地址只允许使用HTTPS。因此,如果需要在非本机访问Dashboard的话,只能选择其他访问方式

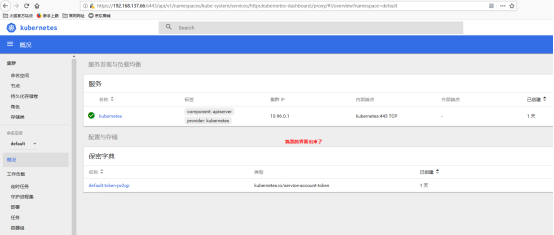

使用Dashboard

Dashboard 界面结构分为三个大的区域。

-

顶部操作区,在这里用户可以搜索集群中的资源、创建资源或退出。

-

左边导航菜单,通过导航菜单可以查看和管理集群中的各种资源。菜单项按照资源的层级分为两类:Cluster 级别的资源 ,Namespace 级别的资源 ,默认显示的是 default Namespace,可以进行切换:

-

中间主体区,在导航菜单中点击了某类资源,中间主体区就会显示该资源所有实例,比如点击 Pods

六、集群测试

1. 部署应用

1.1 通过命令方式部署

通过命令行方式部署apache服务,--replicas=3设置副本数3(在K8S v1.18.0以后,--replicas已弃用 ,推荐用 deployment 创建 pods)

[root@master ~]# kubectl run httpd-app --image=httpd --replicas=3

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/httpd-app created

# kubectl delete pods httpd-app #删除pod

eg:应用创建

1.创建一个测试用的deployment:

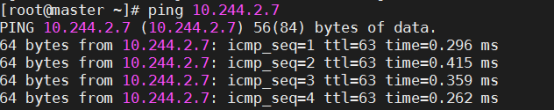

[root@master ~]# kubectl run net-test --image=alpine --replicas=2 sleep 360000

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/net-test created

2.查看获取IP情况

[root@master ~]# kubectl get pod -o wide

3.测试联通性(在对应的node节点去测试)

1.2 通过配置文件方式部署nginx服务

cat > nginx.yml << EOF

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: nginx

spec:

selector:

matchLabels:

app: nginx

replicas: 3

template:

metadata:

labels:

app: nginx

spec:

restartPolicy: Always

containers:

- name: nginx

image: nginx:latest

EOF

说明:在K8S v1.18.0以后,Deployment对于的apiVersion已经更改

查看apiVersion: kubectl api-versions

查看Kind,并且可以得到apiVersion与Kind的对应关系: kubectl api-resources

[root@master ~]# kubectl apply -f nginx.yml

deployment.extensions/nginx created

# kubectl describe deployments nginx #查看详情

# kubectl logs nginx-55649fd747-d86js #查看日志

# kubectl get pod #查看pod

NAME READY STATUS RESTARTS AGE

nginx-55649fd747-5dlxg 1/1 Running 0 2m6s

nginx-55649fd747-d86js 1/1 Running 0 2m6s

nginx-55649fd747-dqq46 1/1 Running 0 2m6s

# kubectl get pod -w #一直watch着!

[root@master ~]# kubectl get pods -o wide //查看所有的pods更详细些

可以看到nginx的3个副本pod均匀分布在2个node节点上,为什么没有分配在master上了,因为master上打了污点

# kubectl get rs #查看副本

NAME DESIRED CURRENT READY AGE

nginx-55649fd747 3 3 3 3m3s

#通过标签查看指定的pod

语法:kubectl get pods -l Labels -o wide

如何查看Labels:# kubectl describe deployment deployment_name

或者:kubectl get pod --show-labels

[root@master ~]# kubectl get pods -l app=nginx -o wide

[root@master ~]# kubectl get pods --all-namespaces || kubectl get pods -A #查看所以pod(不同namespace下的pod)

---------------------以上只是部署了,下面就暴露端口提供外部访问----------------

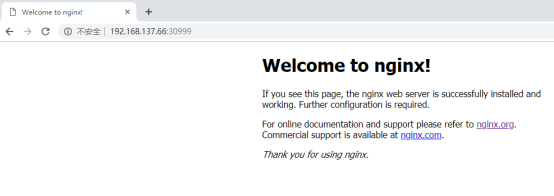

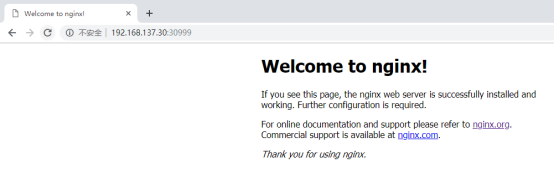

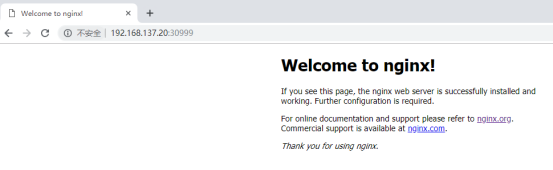

[root@master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

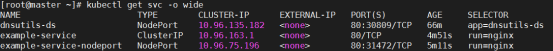

[root@master ~]# kubectl get services nginx || kubectl get svc nginx #service缩写为svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx NodePort 10.109.227.43 <none> 80:30999/TCP 10s

说明:用NodePort方式把k8s集群的nginx services 的80端口通过kube-proxy映射到宿主机的30999,然后就可以在集群外部用:集群集群IP:30999访问服务了

[root@master ~]# netstat -nutlp| grep 30999

tcp 0 0 0.0.0.0:30999 0.0.0.0:* LISTEN 6334/kube-proxy

[root@node01 ~]# netstat -nutlp| grep 30999

tcp 0 0 0.0.0.0:30999 0.0.0.0:* LISTEN 6334/kube-proxy

# kubectl exec nginx-55649fd747-5dlxg -it -- nginx -v #查看版本

可以通过任意 CLUSTER-IP:Port 在集群内部访问这个服务:

[root@master ~]# curl -I 10.109.227.43:80

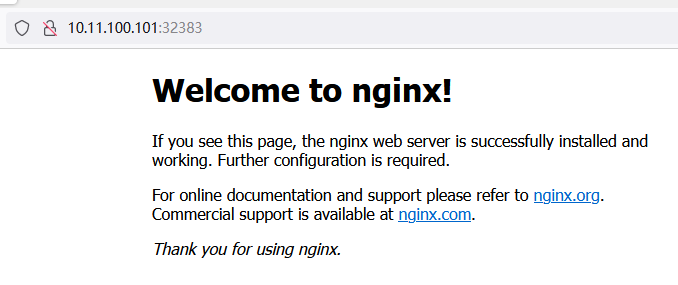

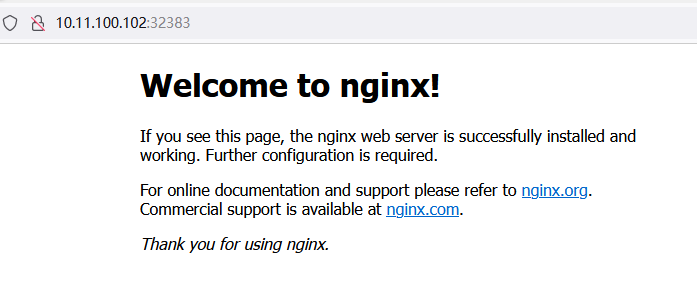

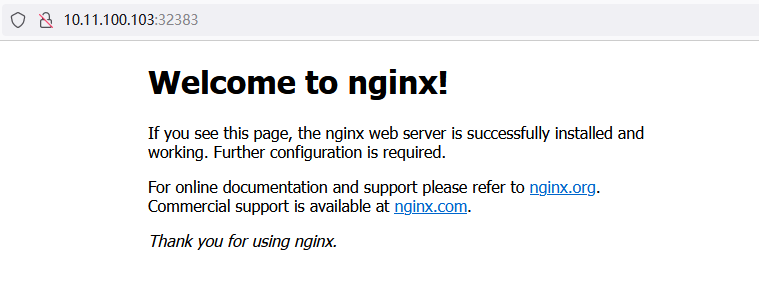

可以通过任意 NodeIP:Port 在集群外部访问这个服务:

[root@master ~]# curl -I 192.168.137.66:30999

[root@master ~]# curl -I 192.168.137.30:30999

[root@master ~]# curl -I 192.168.137.20:30999

访问master_ip:30999

访问Node01_ip:30999

访问Node02_ip:30999

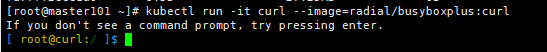

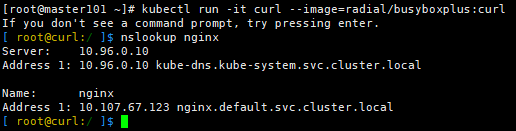

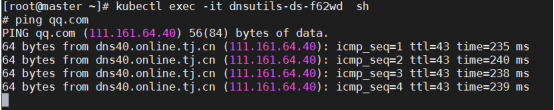

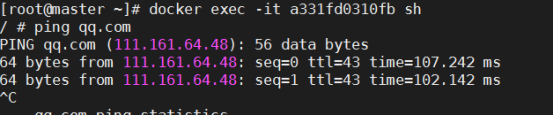

最后验证一下coredns(需要coredns服务正常), pod network是否正常:

运行Busybox并进入交互模式(busybox是一个很小的操作系统)

[root@master ~]# kubectl run -it curl --image=radial/busyboxplus:curl

If you don't see a command prompt, try pressing enter.

输入nslookup nginx查看是否可以正确解析出集群内的IP,以验证DNS是否正常

[ root@curl-66bdcf564-brhnk:/ ]$ nslookup nginx

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: nginx

Address 1: 10.109.227.43 nginx.default.svc.cluster.local

说明: 10.109.227.43 :cluster_IP

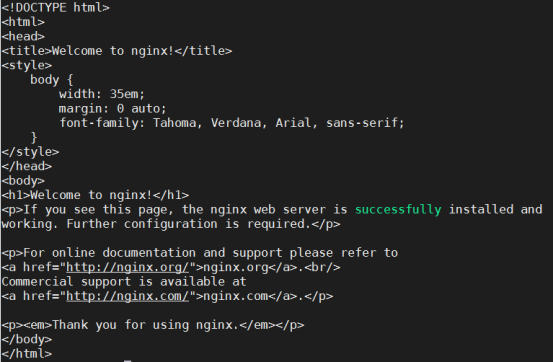

通过服务名进行访问,验证kube-proxy是否正常:

[ root@curl-66bdcf564-brhnk:/ ]$ curl http://nginx/

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

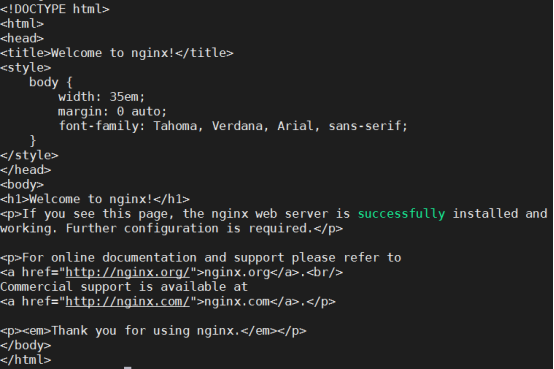

[ root@curl-66bdcf564-brhnk:/ ]$ wget -O- -q http://nginx:80/

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

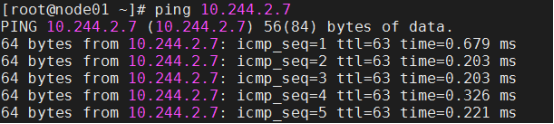

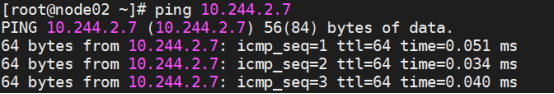

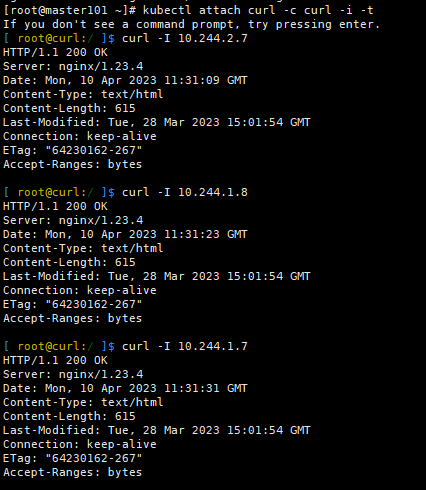

分别访问一下3个Pod的内网IP,验证跨Node的网络通信是否正常

[root@master ~]# kubectl get pod -o wide

[ root@curl-66bdcf564-brhnk:/ ]$ curl -I 10.244.2.5

HTTP/1.1 200 OK

[ root@curl-66bdcf564-brhnk:/ ]$ curl -I 10.244.1.4

HTTP/1.1 200 OK

[ root@curl-66bdcf564-brhnk:/ ]$ curl -I 10.244.2.6

HTTP/1.1 200 OK

删除相关操作

# kubectl delete svc nginx #删除svc

# kubectl delete deployments nginx #删除deploy

# kubectl delete -f nginx.yml #或者利用配置文件删除

# kubectl get svc #查看是否删除

# kubectl get deployments #查看是否删除

# kubectl get pod #查看是否删除

# kubectl get rs #查看副本

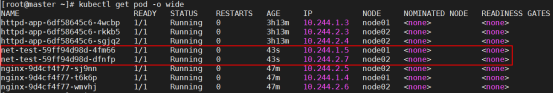

七、驱逐pod & 移除节点和集群

kubernetes集群移除节点

以移除node02节点为例,在Master节点上运行:

第一步:设置节点不可调度,即不会有新的pod在该节点上创建

kubectl cordon node02

设置完成后,该节点STATUS 将会多一个SchedulingDisabled的tag,表示配置成功。

然后开始对节点上的pod进行驱逐,迁移该pod到其他节点

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 3d v1.14.2

node01 Ready <none> 2d22h v1.14.2

node02 Ready,SchedulingDisabled <none> 2d v1.14.2

第二步:pod驱逐迁移

[root@master ~]# kubectl drain node02 --delete-local-data --force --ignore-daemonsets

node/node02 cordoned

WARNING: ignoring DaemonSet-managed Pods: kube-system/kube-flannel-ds-amd64-slbj7, kube-system/kube-proxy-h8ggq

evicting pod "kubernetes-dashboard-5d9599dc98-stgg2"

evicting pod "nginx-9d4cf4f77-sj9nn"

evicting pod "net-test-59ff94d98d-dfnfp"

evicting pod "httpd-app-6df58645c6-rkkb5"

evicting pod "httpd-app-6df58645c6-sgjq2"

evicting pod "nginx-9d4cf4f77-wmvhj"

pod/kubernetes-dashboard-5d9599dc98-stgg2 evicted

pod/httpd-app-6df58645c6-sgjq2 evicted

pod/nginx-9d4cf4f77-wmvhj evicted

pod/nginx-9d4cf4f77-sj9nn evicted

pod/httpd-app-6df58645c6-rkkb5 evicted

pod/net-test-59ff94d98d-dfnfp evicted

node/node02 evicted

参数说明:k8s Pod驱逐迁移

https://blog.51cto.com/lookingdream/2539526

--delete-local-data: 即使pod使用了emptyDir也删除。(Flag --delete-local-data has been deprecated, This option is deprecated and will be deleted. Use --delete-emptydir-data.)

--ignore-daemonsets: 忽略deamonset控制器的pod,如果不忽略,deamonset控制器控制的pod被删除后可能马上又在此节点上启动起来,会成为死循环;

--force: 不加force参数只会删除该NODE上由ReplicationController, ReplicaSet, DaemonSet,StatefulSet or Job创建的Pod,加了后还会删除’裸奔的pod’(没有绑定到任何replication controller)

第三步:观察pod重建情况后,对节点进行维护操作。维护结束后对节点重新配置可以调度。

kubectl uncordon node02

维护结束

说明:如果你是kubernetes集群移除节点就不能操作第三步,如果你是驱逐pod那么就不能操作第四步及以后的操作

第四步:移除节点

[root@master ~]# kubectl delete node node02

node "node02" deleted

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 3d v1.14.2

node01 Ready <none> 2d22h v1.14.2

第五步:上面两条命令执行完成后,在node02节点执行清理命令,重置kubeadm的安装状态:

[root@node02 ~]# kubeadm reset

[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

W1003 16:32:34.505195 82844 reset.go:234] [reset] No kubeadm config, using etcd pod spec to get data directory

[reset] No etcd config found. Assuming external etcd

[reset] Please manually reset etcd to prevent further issues

[reset] Stopping the kubelet service

[reset] unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of stateful directories: [/var/lib/kubelet /etc/cni/net.d /var/lib/dockershim /var/run/kubernetes]

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually.

For example:

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

在master上删除node并不会清理node02运行的容器,需要在删除节点上面手动运行清理命令

如果你想重新配置集群,使用新的参数重新运行kubeadm init或者kubeadm join即可

安装完成集群后清除yum安装aliyun的插件

# 修改插件配置文件

vim /etc/yum/pluginconf.d/fastestmirror.conf

# 把enabled=1改成0,禁用该插件

# 修改yum 配置文件

vim /etc/yum.conf

把plugins=1改为0,不使用插件

yum clean all

yum makecache

通过kudeadm方式在centos7上安装kubernetes v1.26.3集群

docker、Containerd ctr、crictl 区别

一、docker 和 containerd区别

- docker 由 docker-client ,dockerd,containerd,docker-shim,runc组成,所以containerd是docker的基础组件之一

- 从k8s的角度看,可以选择 containerd 或 docker 作为运行时组件:其中 containerd 调用链更短,组件更少,更稳定,占用节点资源更少。所以k8s后来的版本开始默认使用 containerd 。

- containerd 相比于docker , 多了 namespace 概念,每个 image 和 container 都会在各自的namespace下可见。

- 调用关系

- docker 作为 k8s 容器运行时,调用关系为:kubelet

-->dockershim (在 kubelet 进程中)-->dockerd--> containerd - containerd 作为 k8s 容器运行时,调用关系为:kubelet

-->cri plugin(在 containerd 进程中)-->containerd

| 命令 | docker | ctr(containerd) | crictl(kubernetes) |

|---|---|---|---|

| 查看运行的容器 | docker ps | ctr task ls/ctr container ls | crictl ps |

| 查看镜像 | docker images | ctr image ls | crictl images |

| 查看容器日志 | docker logs | 无 | crictl logs |

| 查看容器数据信息 | docker inspect | ctr container info | crictl inspect |

| 查看容器资源 | docker stats | 无 | crictl stats |

| 启动/关闭已有的容器 | docker start/stop | ctr task start/kill | crictl start/stop |

| 运行一个新的容器 | docker run | ctr run | 无(最小单元为pod) |

| 修改镜像标签 | docker tag | ctr image tag | 无 |

| 创建一个新的容器 | docker create | ctr container create | crictl create |

| 导入镜像 | docker load | ctr image import | 无 |

| 导出镜像 | docker save | ctr image export | 无 |

| 删除容器 | docker rm | ctr container rm | crictl rm |

| 删除镜像 | docker rmi | ctr image rm | crictl rmi |

| 拉取镜像 | docker pull | ctr image pull | ctictl pull |

| 推送镜像 | docker push | ctr image push | 无 |

| 在容器内部执行命令 | docker exec | 无 | crictl exec |

二、ctr 和 crictl 命令区分

- ctr 是 containerd 自带的CLI命令行工具。不支持 build,commit 镜像,使用ctr 看镜像列表就需要加上-n 参数指定命名空间。

ctr -n=k8s.io image ls - crictl 是 k8s中CRI(容器运行时接口) 兼容的容器运行时命令行客户端,可以使用它来检查和调试 k8s 节点上的容器运行时和应用程序,k8s使用该客户端和containerd进行交互。

ctr -v输出的是 containerd 的版本,crictl -v输出的是当前 k8s 的版本,从结果显而易见你可以认为 crictl 是用于 k8s 的。

# crictl -v

crictl version v1.26.0

# ctr -v

ctr containerd.io 1.6.19

注:一般来说你某个主机安装了 k8s 后,命令行才会有 crictl 命令。而 ctr 是跟 k8s 无关的,你主机安装了containerd 服务后就可以操作 ctr 命令。

containerd 相比于docker , 多了namespace概念,每个image和container 都会在各自的namespace下可见。

ctr 客户端 主要区分了 3 个命名空间分别是k8s.io、moby和default,目前k8s会使用k8s.io作为命名空间.我们用crictl操作的均在k8s.io命名空间,使用ctr 看镜像列表就需要加上-n参数。crictl 是只有一个k8s.io命名空间,但是没有-n参数.

一、每台机器安装containerd

docker跟containerd不冲突,docker是为了能基于dockerfile构建镜像

# 添加docker源

curl -L -o /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# 安装containerd

yum install -y containerd.io

# 创建默认配置文件

containerd config default > /etc/containerd/config.toml

# 修改sandbox_image的前缀为阿里云前缀地址,不设置会连接不上。根据实际情况修改

sed -i "s#registry.k8s.io/pause#registry.aliyuncs.com/google_containers/pause#g" /etc/containerd/config.toml

# 设置驱动为systemd

sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

# 设置containerd地址为aliyun镜像地址

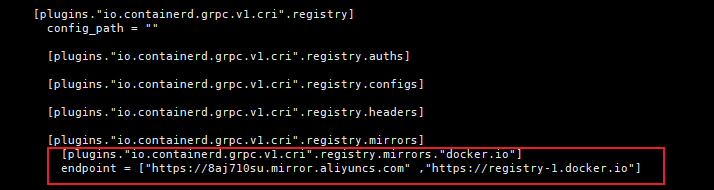

vi /etc/containerd/config.toml #注意,配置文件有格式要求。实测配置后报错

# 文件内容为

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = ""

[plugins."io.containerd.grpc.v1.cri".registry.auths]

[plugins."io.containerd.grpc.v1.cri".registry.configs]

[plugins."io.containerd.grpc.v1.cri".registry.headers]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]

endpoint = ["https://docker.mirrors.ustc.edu.cn","http://hub-mirror.c.163.com"]

[plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]

tls_cert_file = ""

tls_key_file = ""

# 配置containerd镜像加速器

sed -i 's@config_path = ""@config_path = "/etc/containerd/certs.d"@g' /etc/containerd/config.toml

mkdir /etc/containerd/certs.d/docker.io/ -p

cat >/etc/containerd/certs.d/docker.io/hosts.toml <<EOF

[host."https://dbxvt5s3.mirror.aliyuncs.com",host."https://registry.docker-cn.com"]

capabilities = ["pull"]

EOF

# 重启服务

systemctl daemon-reload

systemctl enable --now containerd

systemctl restart containerd

systemctl status containerd

# 是否安装成功

# ctr -v

ctr containerd.io 1.6.19

# runc -v

runc version 1.1.4

commit: v1.1.4-0-g5fd4c4d

spec: 1.0.2-dev

go: go1.19.7

libseccomp: 2.3.1

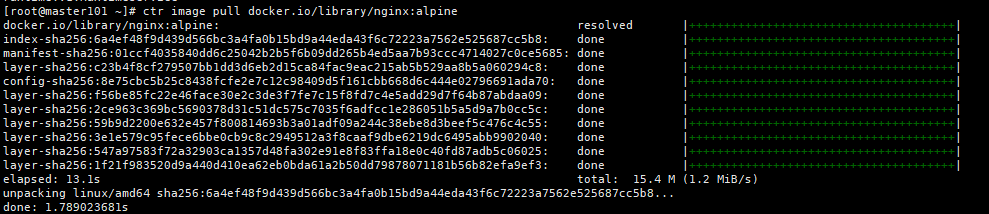

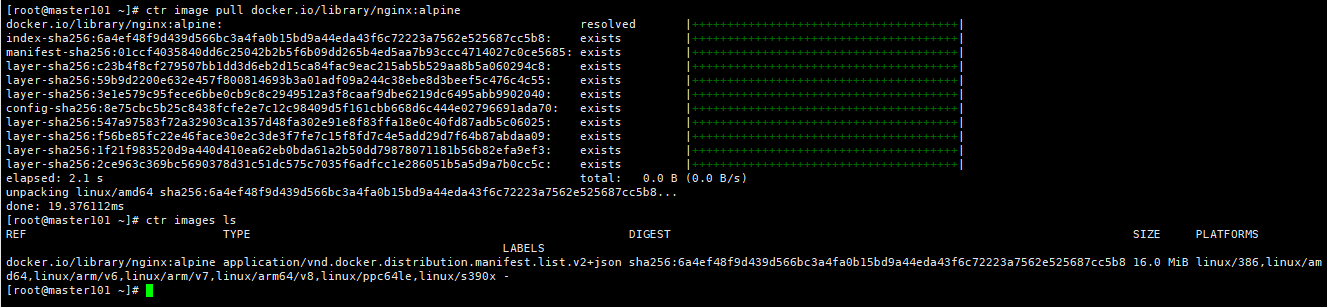

# ctr image pull docker.io/library/nginx:alpine #拉取镜像

docker.io/library/nginx:alpine: resolved |++++++++++++++++++++++++++++++++++++++|

index-sha256:6a4ef48f9d439d566bc3a4fa0b15bd9a44eda43f6c72223a7562e525687cc5b8: done |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:01ccf4035840dd6c25042b2b5f6b09dd265b4ed5aa7b93ccc4714027c0ce5685: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:c23b4f8cf279507bb1dd3d6eb2d15ca84fac9eac215ab5b529aa8b5a060294c8: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:8e75cbc5b25c8438fcfe2e7c12c98409d5f161cbb668d6c444e02796691ada70: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:f56be85fc22e46face30e2c3de3f7fe7c15f8fd7c4e5add29d7f64b87abdaa09: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:2ce963c369bc5690378d31c51dc575c7035f6adfcc1e286051b5a5d9a7b0cc5c: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:59b9d2200e632e457f800814693b3a01adf09a244c38ebe8d3beef5c476c4c55: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:3e1e579c95fece6bbe0cb9c8c2949512a3f8caaf9dbe6219dc6495abb9902040: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:547a97583f72a32903ca1357d48fa302e91e8f83ffa18e0c40fd87adb5c06025: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:1f21f983520d9a440d410ea62eb0bda61a2b50dd79878071181b56b82efa9ef3: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 13.1s total: 15.4 M (1.2 MiB/s)

unpacking linux/amd64 sha256:6a4ef48f9d439d566bc3a4fa0b15bd9a44eda43f6c72223a7562e525687cc5b8...

done: 1.789023681s

# ctr images ls

REF TYPE DIGEST SIZE PLATFORMS LABELS

docker.io/library/nginx:alpine application/vnd.docker.distribution.manifest.list.v2+json sha256:6a4ef48f9d439d566bc3a4fa0b15bd9a44eda43f6c72223a7562e525687cc5b8 16.0 MiB linux/386,linux/amd64,linux/arm/v6,linux/arm/v7,linux/arm64/v8,linux/ppc64le,linux/s390x -

# 安装crictl工具,其实在安装kubelet、kubectl、kubeadm的时候会作为依赖安装cri-tools

yum install -y cri-tools

# 生成配置文件

crictl config runtime-endpoint

# 编辑配置文件

cat << EOF | tee /etc/crictl.yaml

runtime-endpoint: "unix:///run/containerd/containerd.sock"

image-endpoint: "unix:///run/containerd/containerd.sock"

timeout: 10

debug: false

pull-image-on-create: false

disable-pull-on-run: false

EOF

# 重启containerd

systemctl restart containerd

# crictl images

IMAGE TAG IMAGE ID SIZE

二、k8s安装准备工作

安装Centos是已经禁用了防火墙和selinux并设置了阿里源。master和node节点都执行本部分操作

1. 配置主机

1.1 修改主机名(主机名不能有下划线)

[root@centos7 ~]# hostnamectl set-hostname master

[root@centos7 ~]# cat /etc/hostname

master

退出重新登陆即可显示新设置的主机名master

1.2 修改hosts文件

[root@master ~]# cat >> /etc/hosts << EOF

192.168.137.66 master

192.168.137.30 node01

192.168.137.20 node02

EOF

2. 同步系统时间

$ yum -y install ntpdate chrony && systemctl enable chronyd && systemctl start chronyd && ntpdate time1.aliyun.com

vi /etc/chrony.conf #添加阿里云的时间服务器地址

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

server ntp1.aliyun.com iburst minpoll 4 maxpoll 10

server ntp2.aliyun.com iburst minpoll 4 maxpoll 10

server ntp3.aliyun.com iburst minpoll 4 maxpoll 10

server ntp4.aliyun.com iburst minpoll 4 maxpoll 10

server ntp5.aliyun.com iburst minpoll 4 maxpoll 10

server ntp6.aliyun.com iburst minpoll 4 maxpoll 10

server ntp7.aliyun.com iburst minpoll 4 maxpoll 10

systemctl restart chronyd

# 查看时间同步源状态

chronyc sourcestats -v

chronyc sources

centos7默认已启用chrony服务,执行chronyc sources命令,查看存在以*开头的行,说明已经与NTP服务器时间同步

$ crontab -e #写入定时任务

1 */2 * * * /usr/sbin/ntpdate time1.aliyun.com

3. 验证mac地址uuid

[root@master ~]# cat /sys/class/net/ens33/address

[root@master ~]# cat /sys/class/dmi/id/product_uuid

保证各节点mac和uuid唯一

4. 禁用swap

为什么要关闭swap交换分区?

Swap是交换分区,如果机器内存不够,会使用swap分区,但是swap分区的性能较低,k8s设计的时候为了能提升性能,默认是不允许使用交换分区的。Kubeadm初始化的时候会检测swap是否关闭,如果没关闭,那就初始化失败。如果不想要关闭交换分区,安装k8s的时候可以指定--ignore-preflight-errors=Swap来解决

解决主机重启后kubelet无法自动启动问题:https://www.hangge.com/blog/cache/detail_2419.html

由于K8s必须保持全程关闭交换内存,之前我安装是只是使用swapoff -a 命令暂时关闭swap。而机器重启后,swap 还是会自动启用,从而导致kubelet无法启动

3.1 临时禁用

[root@master ~]# swapoff -a

3.2 永久禁用

若需要重启后也生效,在禁用swap后还需修改配置文件/etc/fstab,注释swap

[root@master ~]# sed -i.bak '/swap/s/^/#/' /etc/fstab

或者修改内核参数,关闭swap

echo "vm.swappiness = 0" >> /etc/sysctl.conf

swapoff -a && swapon -a && sysctl -p

或者:Swap的问题:要么关闭swap,要么忽略swap

通过参数忽略swap报错

在kubeadm初始化时增加--ignore-preflight-errors=Swap参数,注意Swap中S要大写

kubeadm init --ignore-preflight-errors=Swap

另外还要设置/etc/sysconfig/kubelet参数

sed -i 's/KUBELET_EXTRA_ARGS=/KUBELET_EXTRA_ARGS="--fail-swap-on=false"/' /etc/sysconfig/kubelet

在以往老版本中是必须要关闭swap的,但是现在新版又多了一个选择,可以通过参数指定,忽略swap报错!

[root@elk-node-1 ~]# vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

5. 关闭防火墙、SELinux

在每台机器上关闭防火墙:

① 关闭服务,并设为开机不自启

$ sudo systemctl stop firewalld

$ sudo systemctl disable firewalld

② 清空防火墙规则

sudo iptables -F && sudo iptables -X && sudo iptables -F -t nat && sudo iptables -X -t nat

sudo iptables -P FORWARD ACCEPT

-F 是清空指定某个 chains 内所有的 rule 设定

-X 是删除使用者自定 iptables 项目

1、关闭 SELinux,否则后续 K8S 挂载目录时可能报错 Permission denied :

$ sudo setenforce 0

2、修改配置文件,永久生效;

sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

6. 内核参数修改

https://zhuanlan.zhihu.com/p/374919190

开启 bridge-nf-call-iptables,如果 Kubernetes 环境的网络链路中走了 bridge 就可能遇到上述 Service 同节点通信问题,而 Kubernetes 很多网络实现都用到了 bridge。

启用 bridge-nf-call-iptables 这个内核参数 (置为 1),表示 bridge 设备在二层转发时也去调用 iptables 配置的三层规则 (包含 conntrack),所以开启这个参数就能够解决上述 Service 同节点通信问题,这也是为什么在 Kubernetes 环境中,大多都要求开启 bridge-nf-call-iptables 的原因。

RHEL / CentOS 7上的一些用户报告了由于iptables被绕过而导致流量路由不正确的问题

WARNING: bridge-nf-call-iptables is disabled

WARNING: bridge-nf-call-ip6tables is disabled

解决上面的警告:打开iptables内生的桥接相关功能,已经默认开启了,没开启的自行开启(1表示开启,0表示未开启)

# cat /proc/sys/net/bridge/bridge-nf-call-ip6tables

1

# cat /proc/sys/net/bridge/bridge-nf-call-iptables

1

4.1 自动开启桥接功能

临时修改

[root@master ~]# sysctl net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-iptables = 1

[root@master ~]# sysctl net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-ip6tables = 1

[root@master ~]# sysctl net.ipv4.ip_forward=1

net.ipv4.ip_forward = 1

或者用echo也行:

echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

echo 1 > /proc/sys/net/bridge/bridge-nf-call-ip6tables

4.2 永久修改

[root@master ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

[root@master ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1 #Docker从1.13版本开始调整了默认的防火墙规则,禁用了iptables filter表中FOWARD链,导致pod无法通信

# 应用 sysctl 参数而不重新启动

sudo sysctl --system

7. 加载ipvs相关模块

由于ipvs已经加入到了内核的主干,所以为kube-proxy开启ipvs的前提需要加载以下的内核模块:

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack_ipv4

br_netfilter

在所有的Kubernetes节点执行以下脚本:

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

modprobe -- br_netfilter

EOF

执行脚本

[root@master ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

上面脚本创建了/etc/sysconfig/modules/ipvs.modules文件,保证在节点重启后能自动加载所需模块。 使用lsmod | grep -e ip_vs -e nf_conntrack_ipv4命令查看是否已经正确加载所需的内核模块。

进行配置时会报错modprobe: FATAL: Module nf_conntrack_ipv4 not found.

这是因为使用了高内核,一般教程都是3.2的内核。在高版本内核已经把nf_conntrack_ipv4替换为nf_conntrack了

接下来还需要确保各个节点上已经安装了ipset软件包。 为了便于查看ipvs的代理规则,最好安装一下管理工具ipvsadm。

ipset是iptables的扩展,可以让你添加规则来匹配地址集合。不同于常规的iptables链是线性的存储和遍历,ipset是用索引数据结构存储,甚至对于大型集合,查询效率非常都优秀。

# yum install ipset ipvsadm -y

8. 设置kubernetes源(在阿里源)

6.1 新增kubernetes源

[root@master ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

解释:

[] 中括号中的是repository id,唯一,用来标识不同仓库

name 仓库名称,自定义

baseurl 仓库地址

enable 是否启用该仓库,默认为1表示启用

gpgcheck 是否验证从该仓库获得程序包的合法性,1为验证

repo_gpgcheck 是否验证元数据的合法性 元数据就是程序包列表,1为验证

gpgkey=URL 数字签名的公钥文件所在位置,如果gpgcheck值为1,此处就需要指定gpgkey文件的位置,如果gpgcheck值为0就不需要此项了

6.2 更新缓存

[root@master ~]# yum clean all

[root@master ~]# yum -y makecache

三、Master节点安装->对master节点也需要docker

完整的官方文档可以参考:

https://kubernetes.io/docs/setup/independent/create-cluster-kubeadm/

https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm-init/

Master节点端口

| 端口 | 用途 |

|---|---|

| 6443 | Kubernetes API server |

| 2379-2380 | etcd server client API |

| 10250 | kubelet API |

| 10251 | Kube-scheduler |

| 10252 | Kube-controller-manager |

1. 版本查看

[root@master ~]# yum list kubelet --showduplicates | sort -r

[root@master ~]# yum list kubeadm --showduplicates | sort -r

[root@master ~]# yum list kubectl --showduplicates | sort -r

目前最新版是

kubelet.x86_64 1.26.3-0 kubernetes

kubeadm.x86_64 1.26.3-0 kubernetes

kubectl.x86_64 1.26.3-0 kubernetes

2. 安装指定版本kubelet、kubeadm和kubectl

官方安装文档可以参考:https://kubernetes.io/docs/setup/independent/install-kubeadm/

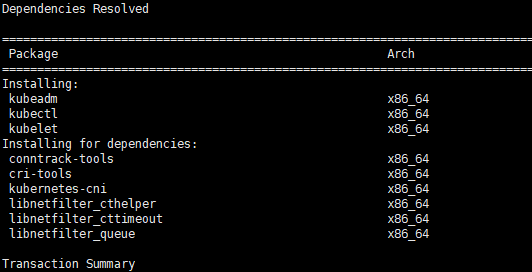

2.1 安装三个包

[root@master ~]# yum install -y kubelet-1.14.2 kubeadm-1.14.2 kubectl-1.14.2

若不指定版本直接运行

yum install -y kubelet kubeadm kubectl #默认安装最新版

ps:由于官网未开放同步方式, 可能会有索引gpg检查失败的情况, 这时请用 yum install -y --nogpgcheck kubelet kubeadm kubectl 安装

Kubelet的安装文件:

[root@elk-node-1 ~]# rpm -ql kubelet

/etc/kubernetes/manifests #清单目录

/etc/sysconfig/kubelet #配置文件

/usr/bin/kubelet #主程序

/usr/lib/systemd/system/kubelet.service #unit文件

2.2 安装包说明

-

kubelet 运行在集群所有节点上,用于启动Pod和containers等对象的工具,维护容器的生命周期

-

kubeadm 安装K8S工具,用于初始化集群,启动集群的命令工具

-

kubectl K8S命令行工具,用于和集群通信的命令行,通过kubectl可以部署和管理应用,查看各种资源,创建、删除和更新各种组件

2.3 配置并启动kubelet

配置启动kubelet服务

(1)修改配置文件

[root@master ~]# vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

#KUBE_PROXY=MODE=ipvs

echo 'KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"' > /etc/sysconfig/kubelet

(2)启动kubelet并设置开机启动:

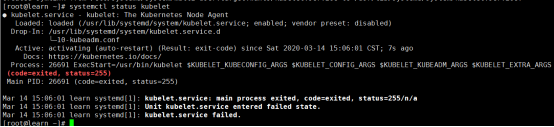

[root@master ~]# systemctl enable kubelet && systemctl start kubelet && systemctl enable --now kubelet

虽然启动失败,但是也得启动,否则集群初始化会卡死

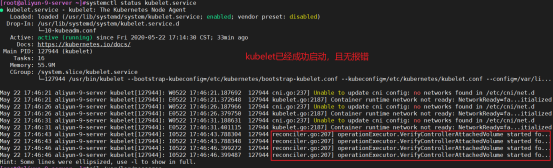

此时kubelet的状态,还是启动失败,通过journalctl -xeu kubelet能看到error信息;只有当执行了kubeadm init后才会启动成功。

因为K8S集群还未初始化,所以kubelet 服务启动不成功,下面初始化完成,kubelet就会成功启动,但是还是会报错,因为没有部署flannel网络组件

搭建集群时首先保证正常kubelet运行和开机启动,只有kubelet运行才有后面的初始化集群和加入集群操作。

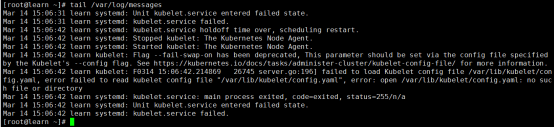

查找启动kubelet失败原因:查看启动状态

systemctl status kubelet

提示信息kubelet.service failed.

查看报错日志

tail /var/log/messages

2.4 kubectl命令补全

kubectl 主要是对pod、service、replicaset、deployment、statefulset、daemonset、job、cronjob、node资源的增删改查

# 安装kubectl自动补全命令包

[root@master ~]# yum install -y bash-completion

[root@master ~]# source /usr/share/bash-completion/bash_completion

[root@master ~]# source <(kubectl completion bash)

# 添加的当前shell

[root@master ~]# echo "source <(kubectl completion bash)" >> ~/.bash_profile

[root@master ~]# source ~/.bash_profile

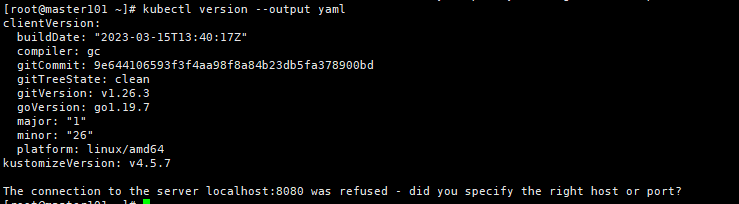

# 查看kubectl的版本:

[root@master101 ~]# kubectl version --output yaml

clientVersion:

buildDate: "2023-03-15T13:40:17Z"

compiler: gc

gitCommit: 9e644106593f3f4aa98f8a84b23db5fa378900bd

gitTreeState: clean

gitVersion: v1.26.3

goVersion: go1.19.7

major: "1"

minor: "26"

platform: linux/amd64

kustomizeVersion: v4.5.7

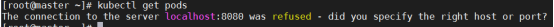

The connection to the server localhost:8080 was refused - did you specify the right host or port?

3. 下载镜像(建议采取脚本方式下载必须的镜像)

3.1 镜像下载的脚本

Kubernetes几乎所有的安装组件和Docker镜像都放在goolge自己的网站上,直接访问可能会有网络问题,这里的解决办法是从阿里云镜像仓库下载镜像,拉取到本地以后改回默认的镜像tag。

可以通过如下命令导出默认的初始化配置:

$ kubeadm config print init-defaults > kubeadm.yaml

如果将来出了新版本配置文件过时,则使用以下命令转换一下:更新kubeadm文件

# kubeadm config migrate --old-config kubeadm.yaml --new-config kubeadmnew.yaml

打开该文件查看,发现配置的镜像仓库如下:

imageRepository: registry.k8s.io

在国内该镜像仓库是连不上,可以用国内的镜像代替:

imageRepository: registry.aliyuncs.com/google_containers

采用国内镜像的方案,由于coredns的标签问题,会导致拉取coredns:v1.3.1拉取失败,这时候我们可以手动拉取,并自己打标签。

打开kubeadm.yaml,然后进行相应的修改,可以指定kubernetesVersion版本,pod的选址访问等。

apiVersion: kubeadm.k8s.io/v1beta3

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 10.11.100.101 #控制节点IP

bindPort: 6443

nodeRegistration:

criSocket: unix:///var/run/containerd/containerd.sock #使用containerd作为容器运行时

imagePullPolicy: IfNotPresent

name: master101 #控制节点主机名

taints: null

---

apiServer:

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: registry.k8s.io #镜像仓库地址,如果提前准备好了镜像则不用修改.否则修改为可访问的地址.如aliyun的registry.cn-hangzhou.aliyuncs.com/google_containers

kind: ClusterConfiguration

kubernetesVersion: 1.26.3 #k8s版本

networking:

dnsDomain: cluster.local

podSubnet: 10.244.0.0/16 #Pod网段

serviceSubnet: 10.96.0.0/12 #Service网段

scheduler: {}

#kubelet cgroup配置为systemd

---

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

cgroupDriver: systemd

查看初始化集群时,需要拉的镜像名

[root@master101 ~]# kubeadm config images list

[root@master101 ~]# kubeadm config images list --kubernetes-version=v1.26.3

registry.k8s.io/kube-apiserver:v1.26.3

registry.k8s.io/kube-controller-manager:v1.26.3

registry.k8s.io/kube-scheduler:v1.26.3

registry.k8s.io/kube-proxy:v1.26.3

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.6-0

registry.k8s.io/coredns/coredns:v1.9.3

kubernetes镜像拉取命令:

[root@master101 ~]# kubeadm config images pull --config=kubeadm.yaml #拉取镜像后还需要修改tag

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.26.3

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.26.3

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.26.3

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.26.3

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.9

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.6-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.9.3

=======================一般采取改方式下载镜像====================

# 或者用以下方式拉取镜像到本地

[root@master ~]# vim image.sh

#!/bin/bash

url=registry.cn-hangzhou.aliyuncs.com/google_containers

version=v1.26.3

images=(`kubeadm config images list --kubernetes-version=$version|awk -F '/' '{print $2}' | grep -v coredns`)

for imagename in ${images[@]} ; do

ctr -n k8s.io image pull $url/$imagename

ctr -n k8s.io images tag $url/$imagename registry.k8s.io/$imagename

ctr -n k8s.io images rm $url/$imagename

done

#采用国内镜像的方案,由于coredns的标签问题,会导致拉取coredns:v1.3.1拉取失败,这时候我们可以手动拉取,并自己打标签。

#another image

ctr -n k8s.io image pull $url/coredns:v1.9.3

ctr -n k8s.io images tag $url/coredns:v1.9.3 registry.k8s.io/coredns/coredns:v1.9.3

ctr -n k8s.io images rm $url/coredns:v1.9.3

解释:url为阿里云镜像仓库地址,version为安装的kubernetes版本

3.2 下载镜像

运行脚本image.sh,下载指定版本的镜像

[root@master ~]# bash image.sh

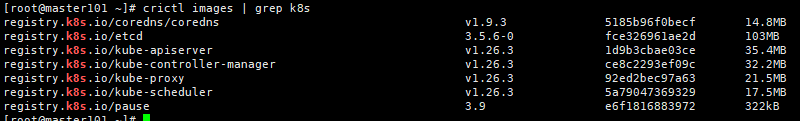

[root@master ~]# crictl images

IMAGE TAG IMAGE ID SIZE

registry.k8s.io/coredns/coredns v1.9.3 5185b96f0becf 14.8MB

registry.k8s.io/etcd 3.5.6-0 fce326961ae2d 103MB

registry.k8s.io/kube-apiserver v1.26.3 1d9b3cbae03ce 35.4MB

registry.k8s.io/kube-controller-manager v1.26.3 ce8c2293ef09c 32.2MB

registry.k8s.io/kube-proxy v1.26.3 92ed2bec97a63 21.5MB

registry.k8s.io/kube-scheduler v1.26.3 5a79047369329 17.5MB

registry.k8s.io/pause 3.9 e6f1816883972 322kB

### k8s.gcr.io 地址替换

将k8s.gcr.io替换为

registry.cn-hangzhou.aliyuncs.com/google_containers

或者

registry.aliyuncs.com/google_containers

或者

mirrorgooglecontainers

### quay.io 地址替换

将 quay.io 替换为

quay.mirrors.ustc.edu.cn

### gcr.io 地址替换

将 gcr.io 替换为 registry.aliyuncs.com

====================也可以通过dockerhub先去搜索然后pull下来============

[root@master ~]# cat image.sh

#!/bin/bash

images=(kube-apiserver:v1.15.1 kube-controller-manager:v1.15.1 kube-scheduler:v1.15.1 kube-proxy:v1.15.1 pause:3.1 etcd:3.3.10)

for imageName in ${images[@]}

do

docker pull mirrorgooglecontainers/$imageName && \

docker tag mirrorgooglecontainers/$imageName k8s.gcr.io/$imageName &&\

docker rmi mirrorgooglecontainers/$imageName

done

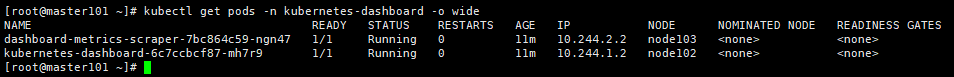

#another image